Generally speaking, one question is the degree to which autonomous robots raise issues our present conceptual schemes must adapt to, or whether they just require technical adjustments.

In most jurisdictions, there is a sophisticated system of civil and criminal liability to resolve such issues.

Technical standards, e.g., for the safe use of machinery in medical environments, will likely need to be adjusted. There is already a field of “verifiable AI” for such safety-critical systems and for “security applications”.

Bodies like the IEEE (The Institute of Electrical and Electronics Engineers) and the BSI (British Standards Institution) have produced “standards”, particularly on more technical sub-problems, such as data security and transparency.

Among the many autonomous systems on land, on water, underwater, in the air, or space, we discuss two samples: autonomous vehicles and autonomous weapons.

After the introduction to the field, the main themes of this article are, first, ethical issues that arise with AI systems as objects, i.e., tools made and used by humans.

This includes issues of privacy and manipulation, opacity and bias, human-robot interaction, employment, and the effects of autonomy.

Second, we consider AI systems as subjects, i.e., ethics for the AI systems themselves in machine ethics and artificial moral agency.

Finally, we turn to the problem of a possible future AI superintelligence leading to a “singularity”. We close with a remark on the vision of AI.

In the future, AI is expected to simplify and accelerate pharmaceutical development.

AI can convert drug discovery from a labor-intensive to capital- and data-intensive process by utilizing robotics and models of genetic targets, drugs, organs, diseases and their progression, pharmacokinetics, safety, and efficacy.

Artificial intelligence (AI) can be used in the drug discovery and development process to speed it up and make it more cost-effective and efficient.

AI has previously been used to identify potential Ebola virus medicines, although, as with any drug study, identifying a lead molecule does not guarantee the development of a safe and successful therapy.

The report also encourages companies to “improve their communications around AI, so that people feel that they are part of its development and not its passive recipients or even victims”.

For this to be achieved, “employees and other stakeholders need to be empowered to take personal responsibility for the consequences of their use of AI, and they need to be provided with the skills to do so”.

Many advertisers, marketers, and online sellers will use any legal means at their disposal to maximize profit, including exploitation of behavioral biases, deception, and addiction generation (Costa and Halpern 2019).

Such manipulation is the business model in much of the gambling and gaming industries, but it is spreading, e.g., to low-cost airlines.

In interface design on web pages or games, this manipulation uses what is called “dark patterns” (Mathur et al. 2019).

At this moment, gambling and the sale of addictive substances are highly regulated, but online manipulation and addiction are not—even though the manipulation of online behavior is becoming a core business model of the Internet.

On the plus side, the data set that AI and machine learning (ML) models are trained on can be modified, and with enough effort invested, the models can become largely unbiased.

In contrast, it is not feasible to let people make completely unbiased decisions on a large scale.

But if the people whose tasks are replaced by AI lose their jobs rather than being promoted to higher-level work, the implications for society can be ominous.

If people who perform repetitive tasks across multiple professions and industries all lose their jobs instead of being promoted, the implementation of AI could leave many people without options for work and damage their lives and the economy.

Unlike doctors, technologists are not obligated by law to be accountable for their actions; instead, ethical principles of practice are applied in this sector.

This comparison summarizes the dispute over whether technologists should be held accountable if an AI system (AIS) is used in a healthcare context and directly affects patients.

If a clinician can’t account for the output of the AIS they’re employing, they won’t be able to appropriately justify their actions if they choose to use that data.

This lack of accountability raises concerns about the possible safety consequences of using unverified or unvalidated AISs in clinical settings. Some scenarios show how opacity affects each stakeholder.

It is indeed a challenging aspect of technology.

We think that a new framework and approach are needed for the approval of AI systems, but practitioners and hospitals using them need to be trained and hence bear the ultimate responsibility for their use.

AI-based medical devices will support the individuals who make decisions about treatments and procedures, not replace them entirely.

There is a dearth of literature in this regard and a detailed framework needs to be developed by the highest bodies of policymakers.

Both the structural characteristics of the models as well as the machine learning process itself make these new AI technologies different from previous approaches to prognostication.

The lack of explicit rules of how machine learning operates prevents an easy interpretation by humans.

This problem is most pronounced in ANNs due to the multitude of non-linear interactions between network layers.

Moreover, some model types, notably ANNs, are known to produce unexpected results or errors when previously known input data are changed by apparently irrelevant modifications that might be undetectable by human observers (“adversarial examples”).

Whether a particular error is a one-off “bug” or evidence of a systemic failure might be impossible to decide with poorly interpretable machine learning methods.

This also makes AI models in general appear potentially dangerous and is therefore considered a major problem for many applications.
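The idea of an adversarial example can be illustrated with a minimal sketch. It assumes a hypothetical linear classifier with 400 hand-picked weights rather than a trained ANN, and it shifts every input feature by at most 0.02 in the direction that lowers the classifier's score. This resembles sign-of-gradient attacks described in the literature, but all names and numbers here are illustrative assumptions.

```python
def score(weights, bias, x):
    """Linear decision score: positive means class 1."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias

def predict(weights, bias, x):
    return 1 if score(weights, bias, x) > 0 else 0

def perturb(weights, x, epsilon):
    """Shift each feature by at most epsilon in the direction that lowers the score."""
    return [xi - epsilon * ((w > 0) - (w < 0)) for w, xi in zip(weights, x)]

# Hypothetical model: 400 features with small alternating weights.
n_features = 400
weights = [0.1 if i % 2 == 0 else -0.1 for i in range(n_features)]
bias = 0.5

x = [0.5] * n_features                     # original input
x_adv = perturb(weights, x, epsilon=0.02)  # each feature changes by about 4%

print("original prediction: ", predict(weights, bias, x))
print("perturbed prediction:", predict(weights, bias, x_adv))
print("largest feature change:", max(abs(a - b) for a, b in zip(x, x_adv)))
```

No single feature changes by much, yet the many small shifts add up and flip the decision. In high-dimensional inputs such as images, changes of this size can be invisible to a human observer.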

A last caveat: The ethics of AI and robotics is a very young field within applied ethics, with significant dynamics, but few well-established issues and no authoritative overviews—though there is a promising outline.

So this article cannot merely reproduce what the community has achieved thus far but must propose an ordering where little order exists.

Let an ultra-intelligent machine be defined as a machine that can far surpass all the intellectual activities of any man however clever.

Since the design of machines is one of these intellectual activities, an ultra-intelligent machine could design even better machines; there would then unquestionably be an “intelligence explosion”, and the intelligence of man would be left far behind.

Thus the first ultra-intelligent machine is the last invention that man need ever make, provided that the machine is docile enough to tell us how to keep it under control.

The use of new technology raises concerns about the possibility that it will become a new source of inaccuracy and data breaches.

In the high-risk area of healthcare, mistakes can have severe consequences for the patient who is the victim of this error. This is critical to remember since patients come into contact with clinicians at times in their lives when they are most vulnerable.

If harnessed well, such AI-clinician cooperation can be effective, with AI offering evidence-based management and a medical decision guide to the clinician (AI-Health).

It can provide healthcare offerings in diagnosis, drug discovery, epidemiology, personalized care, and operational efficiency.

However, as Ngiam and Khor point out, if AI solutions are to be integrated into medical practice, a sound governance framework is required to protect humans from harm, including harm resulting from unethical behavior (6–17).

Ethical standards in medicine can be traced back to those of the physician Hippocrates, in which the Hippocratic Oath is rooted (18–24).

AI and machine learning refer to computer-based techniques for making decisions that require human-like reasoning about observed data.

Historically, AI technologies were based on explicit rules and logic trying to simulate what was perceived to be the thought processes of human experts.

However, many prognostic questions in medicine are “black box” problems with an unknown number of interacting processes and parameters. Applying a restricted number of rules does not match the complexity of these cases and, therefore, cannot provide a sufficiently accurate prognostication.

New types of AI are based on machine learning and are better suited to this task. They include artificial neural networks (ANNs), random forest techniques, and support vector machines.

The main aspect of machine learning is that the specific parameters for an underlying model architecture, such as synaptic weights in ANNs, are not determined interactively by humans.

Instead, they are learned using general-purpose algorithms to obtain a desired output in response to specific input data.
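As a minimal sketch of what "learned" means here, the following example fits the two parameters of a logistic regression model by gradient descent. The data are hypothetical (one made-up risk marker per patient, with 1 meaning the event occurred), and logistic regression is used only as a small stand-in for the larger models discussed in the text.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic(xs, ys, lr=0.5, epochs=2000):
    """Fit weight w and bias b by gradient descent on the log-loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(w * x + b) - y   # prediction error for one sample
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b

# Hypothetical toy data: risk marker value and observed outcome.
xs = [0.5, 1.0, 1.5, 2.0, 3.0, 3.5, 4.0, 4.5]
ys = [0, 0, 0, 1, 0, 1, 1, 1]

w, b = train_logistic(xs, ys)
risk_low = sigmoid(w * 1.0 + b)    # predicted probability for a low marker value
risk_high = sigmoid(w * 4.0 + b)   # predicted probability for a high marker value
print(f"w={w:.2f}, b={b:.2f}, risk(1.0)={risk_low:.2f}, risk(4.0)={risk_high:.2f}")
```

No human sets `w` or `b` directly. The same general-purpose update rule would produce different values for different data.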

The structural characteristics of a particular method, such as the layered architecture of an ANN, together with the associated set of fitted parameters constitute a model that can be used for making predictions for new data inputs.

Adapting the architecture of a particular AI technology to specific types of input data can enhance predictive performance.

For example, “recurrent” ANNs, such as long short-term memory, are constructed in a way to improve sequential data processing to capture temporal dependencies.

Of note, there has not yet been an algorithm developed to determine which type and architecture of the AI model would be optimal for a specific task.

For each particular model architecture, there are many different and equally good ways of learning from the same data sample. The machine learning algorithms usually do not recognize a single best combination of model parameters if such an optimum exists at all.

They can infer one or several plausible parameter sets to explain observed data presented during the learning phase.
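The point that several parameter sets can explain the same data equally well can be shown with a deliberately tiny sketch. It assumes a hypothetical model `y_hat = a * b * x`, comparable to a network with a single hidden unit, and trains it twice from different starting values.

```python
def fit_product_model(a, b, xs, ys, lr=0.01, steps=5000):
    """Gradient descent on mean squared error for the model y_hat = a * b * x."""
    n = len(xs)
    for _ in range(steps):
        grad_a = grad_b = 0.0
        for x, y in zip(xs, ys):
            err = a * b * x - y
            grad_a += 2 * err * b * x
            grad_b += 2 * err * a * x
        a, b = a - lr * grad_a / n, b - lr * grad_b / n
    return a, b

def mse(a, b, xs, ys):
    return sum((a * b * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Hypothetical data generated by y = 2x.
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]

a1, b1 = fit_product_model(0.5, 3.0, xs, ys)   # first starting point
a2, b2 = fit_product_model(3.0, 0.5, xs, ys)   # second starting point

print(f"run 1: a={a1:.3f}, b={b1:.3f}, error={mse(a1, b1, xs, ys):.2e}")
print(f"run 2: a={a2:.3f}, b={b2:.3f}, error={mse(a2, b2, xs, ys):.2e}")
```

Both runs fit the data almost perfectly, but they end at different values of `a` and `b`. Only the product `a * b` is determined by the data, so the individual parameters carry no unique meaning.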

This technology, therefore, is considered data-driven. It can detect emergent patterns in datasets, but not necessarily causal links.

Datasets in machine learning AI models for prognostication are based on the input of static or dynamic data, i.e., time series, or a combination thereof.

Accumulation of data over time to document trends can enhance prediction accuracy. Of great importance is the processing of heterogeneous data from electronic health records.

Due to the dependency of machine learning on the properties of datasets for training, issues of data quality and stewardship are becoming crucial.

In addition to the availability and usability, the reliability of data is an important topic.

It encompasses integrity, accuracy, consistency, completeness, and suitability of datasets.

Useful output data for prognostication are either numbers representing probabilities of future events, time intervals until these events, or future trajectories of clinical or functional parameters.

In addition to predictions of death vs. survival, prognosticating quality of life trajectories is now becoming more important for guiding decision-making, notably in the elderly.

Although the general concept of quality of life is difficult to operationalize, there are readily observable markers, such as the ability to perform activities of daily living, frailty, or cognitive capacity, which may serve as surrogates.

The learning process itself can be compromised by over- and underfitting models to the specific characteristics of training data. Underfitting models already fail to account for the variability of training data.

Overfitting leads to good performance with training data, but can eventually harm the robustness of the model in future real-world applications with some distributional shifts of input data, e.g., due to variable practice patterns in different countries.
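The contrast between underfitting, a reasonable fit, and overfitting can be sketched with three very simple models. Everything here is an illustrative assumption: the numbers are made up, a constant predictor stands in for an underfitted model, and a lookup of the nearest training point stands in for an overfitted model that memorizes its training data. The "shifted" inputs lie outside the range seen during training.

```python
def mse(predict, xs, ys):
    return sum((predict(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def fit_constant(xs, ys):
    """Underfit: always predict the mean outcome."""
    mean_y = sum(ys) / len(ys)
    return lambda x: mean_y

def fit_line(xs, ys):
    """Ordinary least squares with one predictor."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept

def fit_memorizer(xs, ys):
    """Overfit: return the outcome of the closest training point."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]

# Hypothetical training data (roughly y = 2x plus noise) and shifted inputs.
train_x = [0, 1, 2, 3, 4, 5]
train_y = [0.3, 1.6, 4.2, 5.7, 8.4, 9.8]
shift_x = [6, 7, 8]
shift_y = [12.1, 13.9, 16.2]

constant = fit_constant(train_x, train_y)
line = fit_line(train_x, train_y)
memorizer = fit_memorizer(train_x, train_y)

for name, model in [("constant", constant), ("line", line), ("memorizer", memorizer)]:
    print(f"{name:10s} train error={mse(model, train_x, train_y):7.3f}  "
          f"shifted error={mse(model, shift_x, shift_y):7.3f}")
```

The memorizer has zero training error but performs much worse than the line on the shifted inputs, while the constant model is already poor on the training data.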

The current lack of explicability of many AI techniques is going to restrict their use to adjunct, e.g., decision-support, systems for a while.

Once conclusive evidence for their overall effectiveness and beneficence becomes available, these new techniques will most likely turn into perceived standards.

They will gain professional acceptance, and deviating from them will require justification.

Importantly, values in society evolve. Thus, continuous monitoring of AI model performance and patients’ outcomes should become mandatory to calibrate these measures against ethical standards.

AISs, like IBM’s Watson for oncology, are meant to support clinical users and hence directly influence clinical decision-making. The AIS would then evaluate the information and recommend the patient’s care.

Employing AISs to assist clinicians could change clinical decision-making and, if adopted, create new stakeholder dynamics and a new healthcare paradigm.

Clinicians (including doctors, nurses, and other health professionals) have a stake in the safe roll-out of new technologies in the clinical setting.

Humans depend on technology. We always have, ever since we have been “human;” our technological dependency is almost what defines us as a species.

What used to be just rocks, sticks, and fur clothes has now become much more complex and fragile, however. Losing electricity or cell connectivity can be a serious problem, psychologically or even medically (if there is an emergency). And there is no dependence like intelligence dependence.

If we turn over our decision-making capacities to machines, we will become less experienced at making decisions. For example, this is a well-known phenomenon among airline pilots: the autopilot can do everything about flying an airplane, from take-off to landing, but pilots intentionally choose to manually control the aircraft at crucial times (e.g., take-off and landing) to maintain their piloting skills.

An autonomous decision by the patient or a surrogate decision-maker requires a sufficient understanding of the relevant medical information as well as of the decision-making process within the medical community, such as adherence to guidelines.

The latter condition enables a dynamic dialog, i.e., shared decision-making, during the often unpredictable course of critical diseases. However, it is unrealistic to assume that these conditions can be fulfilled in every case.

Hence, the trust between patients, surrogates, and physicians still is a major pillar of decision-making in intensive care.

Traditionally, the burden of ethical decision-making is put onto the medical staff who must guarantee that the patient or his/her surrogate decides to the best of his/her capacity.

By being the gatekeeper for information, the medical professional, regardless of whether that means humans or any future implementation of AI, acts as the guardian of the patient’s autonomy.

Moreover, it is crucial to take the belief system and expectations of the patient or his/her surrogate into account to prevent a return to the paternalistic medicine of the past.

That type of medicine was mostly based on calculations of non-maleficence and beneficence by physicians. Empirical studies, however, indicated that the trust of patients in the prognostication accuracy of physicians is rather low.

This finding is especially important when discussing irreversible decisions, such as withdrawing treatment after ranking quality of life higher than extending life at any cost. Patient-centered outcomes rarely are binary and can involve a broad range of expectations related to the self-perceived quality of life.

Regarding AI models for prognostication, this fact requires more consideration for both the training samples for machine learning as well as for defining the types of output.

Robotics and AI can thus be seen as covering two overlapping sets of systems: systems that are only AI, systems that are only robotics, and systems that are both. We are interested in all three; the scope of this article is thus not only the intersection but the union of both sets.

AI ethics is a set of moral principles to guide and inform the development and use of artificial intelligence technologies. Because AI does things that would normally require human intelligence, it requires moral guidelines as much as human decision-making. Without ethical AI regulations, the potential for using this technology to perpetuate misconduct is high.

In addition to the four principles of medical ethics, recent guidelines for an ethical AI also dealt with the issue of explicability, i.e., transparency of models in producing outputs based on specific inputs.

Of note, the absence of insight into the mechanisms of data processing by AI models is not fundamentally different from the opacity of human thinking.

Without critical reflection, algorithmic tools, such as conventional prognostication scores, are handled by intensivists like “black boxes”.

However, humans can be requested to reason and justify their conclusions if there is a lack of certainty or trust. In contrast, there is not yet a design framework in place that creates AI systems supporting a similar relationship.

Current research into illustrating the models’ decision-making process in more transparent ways aims at distilling ANNs into graphs for interpretative purposes, such as decision trees, defining decision boundaries, approximating model predictions locally with interpretable models, or analyzing the specific impact of individual parameters on predictions.

Full explicability may not always be possible and other measures to audit outputs need to be implemented to assure that the principles of medical ethics are respected.

Trust in the working of AI algorithms and the ability to interact with them would enhance the patients’ confidence which is required for shared decision-making.

Moreover, model transparency—as far as this might be achieved—also helps to clarify questions of moral and legal accountability in case of mistakes.

When I talk about this topic with any group of students, I discover that all of them are “addicted” to one app or another. It may not be a clinical addiction, but that is the way that the students define it, and they know they are being exploited and harmed.

This is something that app makers need to stop doing: AI should not be designed to intentionally exploit vulnerabilities in human psychology.

AI provides a difficult set of ethical questions for society as well. One question centers on the preservation of the workforce. In the accounting profession, for example, AI can extract data from thousands of lease contracts to enable faster implementation of new lease accounting standards.

This is well illustrated by how much social media influenced the spread of fake news during the 2016 US presidential election, putting Facebook in the spotlight of ethical AI. A 2017 study by NYU and Stanford researchers showed that the most popular fake news stories on Facebook were shared more often than the most popular mainstream news stories.

The fact that this misinformation was able to spread without regulation from Facebook, potentially affecting the results of something as important as a presidential election, is extremely disturbing.

Evidence suggests that AI models can embed and deploy human and social biases at scale.

However, it is the underlying data rather than the algorithm itself that is to be held responsible. Models can be trained on data that contains human decisions or on data that reflects the second-order effects of social or historical inequities.

Additionally, the way data is collected and used can also contribute to bias, and user-generated data can act as a feedback loop that reinforces bias.
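One simple way to make such bias visible is to compare how often each group receives a positive decision in the training data. The sketch below assumes a hypothetical set of historical decisions for two groups, A and B. The gap it reports is sometimes called the demographic parity difference, and it is only one of several possible fairness measures.

```python
from collections import defaultdict

def selection_rates(records):
    """Return the share of positive decisions per group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, decision in records:
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}

# Hypothetical historical decisions: (group, 1 = approved, 0 = rejected).
history = [("A", 1)] * 8 + [("A", 0)] * 2 + [("B", 1)] * 4 + [("B", 0)] * 6

rates = selection_rates(history)
gap = abs(rates["A"] - rates["B"])
print(rates, "gap:", gap)
```

A model trained to imitate these decisions would be rewarded for reproducing the gap, which is how bias in the data can carry over into the model.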

To our knowledge, there are no guidelines or set standards to report and compare these models, but future work should involve this to guide researchers and clinicians.

But the most complicated queries still require a human agent’s intervention. AI-powered automation may be limited in some ways, but the impact can be huge. AI-powered virtual agents reduce customer service fees by up to 30%, and chatbots can handle up to 80% of routine tasks and customer questions.

Google, for example, has developed Artificial Intelligence Principles that form an ethical charter that guides the development and use of artificial intelligence in their research and products.

And not only did Microsoft create Responsible AI Principles that they put into practice to guide all AI innovation at Microsoft, but they also created an AI business school to help other companies create their own AI support policies.

The policy in this field has its ups and downs: Civil liberties and the protection of individual rights are under intense pressure from businesses’ lobbying, secret services, and other state agencies that depend on surveillance.

Privacy protection has diminished massively compared to the pre-digital age when communication was based on letters, analog telephone communications, and personal conversation and when surveillance operated under significant legal constraints.

As an additional point, in general, the more powerful someone or something is, the more transparent it ought to be, while the weaker someone is, the more right to privacy he or she should have. Therefore the idea that powerful AIs might be intrinsically opaque is disconcerting.

AI is moving beyond “nice-to-have” to becoming an essential part of modern digital systems.

As we rely more and more on AI for decision-making, it becomes essential to ensure that these decisions are made ethically and are free from unjust biases. We see a need for Responsible AI systems that are transparent, explainable, and accountable. AI systems are increasingly used to improve patient pathways and surgical outcomes, and they outperform humans in some fields.

AI is likely to merge with, co-exist with, or replace current systems, starting the healthcare age of artificial intelligence, and not using AI is possibly unscientific and unethical.

The ethics of AI and robotics has seen significant press coverage in recent years, which supports related research, but also may end up undermining it: the press often talks as if the issues under discussion were just predictions of what future technology will bring, and as though we already know what would be most ethical and how to achieve that. Press coverage thus focuses on risk, security, and prediction of impact (e.g., on the job market).

The result is a discussion of essentially technical problems that focus on how to achieve a desired outcome. Current discussions in policy and industry are also motivated by image and public relations, where the label “ethical” is not much more than the new “green”, perhaps used for “ethics washing”.

For a problem to qualify as a problem for AI ethics would require that we do not readily know what the right thing to do is. In this sense, job loss, theft, or killing with AI is not a problem in ethics, but whether these are permissible under certain circumstances is a problem. This article focuses on the genuine problems of ethics where we do not readily know what the answers are.

In the EU, some of these issues have been taken into account with Regulation (EU) 2016/679, which foresees that consumers, when faced with a decision based on data processing, will have a legal “right to explanation”. How far this goes and to what extent it can be enforced is disputed. It has also been argued that there may be a double standard here, where we demand a high level of explanation for machine-based decisions despite humans sometimes not reaching that standard themselves.

“The key piece of advice here is to curate the data that is used as input to a system to ensure the signals in the data support the training objectives. For example, if you are creating an AI to automate driving a car, you want your AI to learn from good drivers and not from bad drivers,” Grosset said.

AI is going to be increasingly used in healthcare and hence needs to be morally accountable. Data bias needs to be avoided by using appropriate algorithms based on unbiased real-time data.

Diverse and inclusive programming groups and frequent audits of the algorithm, including its implementation in a system, need to be carried out. While AI may not be able to completely replace clinical judgment, it can help clinicians make better decisions.

If there is a lack of medical competence in a context with limited resources, AI could be utilized to conduct screening and evaluation. In contrast to human decision-making, all AI judgments, even the quickest, are systematic since algorithms are involved.

As a result, even if activities don’t have legal repercussions (because efficient legal frameworks haven’t been developed yet), they always lead to accountability, not by the machine, but by the people who built it and the people who utilize it.

While there are moral dilemmas in the use of AI, it is likely to merge with, co-exist with, or replace current systems, starting the healthcare age of artificial intelligence, and not using AI is also possibly unscientific and unethical.

Machine learning models require enormous amounts of energy to train, so much energy that the costs can run into tens of millions of dollars or more. Needless to say, if this energy is coming from fossil fuels, this has a large negative impact on the climate, not to mention being harmful at other points in the hydrocarbon supply chain.

The ethical issues of AI in surveillance go beyond the mere accumulation of data and direction of attention: They include the use of information to manipulate behavior, online and offline, in a way that undermines autonomous rational choice.

Of course, efforts to manipulate behavior are ancient, but they may gain a new quality when they use AI systems. Given users’ intense interaction with data systems and the deep knowledge about individuals this provides, they are vulnerable to “nudges”, manipulation, and deception. With sufficient prior data, algorithms can be used to target individuals or small groups with just the kind of input that is likely to influence these particular individuals.

A “nudge” changes the environment such that it influences behavior in a predictable way that is positive for the individual, but easy and cheap to avoid (Thaler & Sunstein 2008). There is a slippery slope from here to paternalism and manipulation.

There are a growing number of recommendations and guidelines dealing with the issue of ethical AI.

The European Commission has recently published guidelines for ethical and trustworthy AI. According to these guidelines, special attention should be focused on situations involving vulnerable people and asymmetries of information or power.

In addition to adhering to laws and regulations and being technically robust, AI must be grounded in fundamental rights, societal values, and the ethical principles of explicability, prevention of harm, fairness, and human autonomy.

These principles echo the prima facie principles of medical ethics, beneficence, non-maleficence, justice, and human autonomy, which are aimed at protecting vulnerable patients in the context of uncertainty and social hierarchies.

In conclusion, new AI and machine learning techniques have the potential to improve prognostication in intensive care. However, they require further refinement before they can be introduced into daily practice.

This encompasses technical problems, such as uncertainty quantification, the inclusion of more patient-centered outcome measures, and important ethical issues notably regarding hidden biases as well as the transparency of data processing and the explainability of results.

Thereafter, AI models may become a valuable component of the intensive care team.

Amid this uncertainty about the status of our creations, what we will know is that we humans have moral characters and that, to follow an inexact quote of Aristotle, “we become what we repeatedly do”.

So we ought not to treat AIs and robots badly, or we might be habituating ourselves towards having flawed characters, regardless of the moral status of the artificial beings we are interacting with.

In other words, no matter the status of AIs and robots, for the sake of our moral characters we ought to treat them well, or at least not abuse them.

Prognosticating the course of diseases to inform decision-making is a key component of intensive care medicine.

For several applications in medicine, new methods from the field of artificial intelligence (AI) and machine learning have already outperformed conventional prediction models. Due to their technical characteristics, these methods will present new ethical challenges to the intensivist.

In addition to the standards of data stewardship in medicine, the selection of datasets and algorithms to create AI prognostication models must involve extensive scrutiny to avoid biases and, consequently, injustice against individuals or groups of patients.

Assessment of these models for compliance with the ethical principles of beneficence and non-maleficence should also include quantification of predictive uncertainty.
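As one simple stand-in for quantifying predictive uncertainty, the sketch below puts a bootstrap percentile interval around an estimated event probability. The outcomes are hypothetical (40 comparable patients, 12 of whom experienced the event), and the function name and parameters are illustrative assumptions rather than an established method for any particular prognostic model.

```python
import random

def bootstrap_interval(outcomes, n_resamples=2000, alpha=0.05, seed=0):
    """Percentile bootstrap interval for the mean of 0/1 outcomes."""
    rng = random.Random(seed)           # fixed seed for reproducibility
    n = len(outcomes)
    estimates = []
    for _ in range(n_resamples):
        # Resample patients with replacement and re-estimate the event rate.
        sample = [outcomes[rng.randrange(n)] for _ in range(n)]
        estimates.append(sum(sample) / n)
    estimates.sort()
    lower = estimates[int((alpha / 2) * n_resamples)]
    upper = estimates[int((1 - alpha / 2) * n_resamples) - 1]
    return lower, upper

# Hypothetical outcomes for 40 comparable patients: 1 = event occurred.
outcomes = [1] * 12 + [0] * 28

point_estimate = sum(outcomes) / len(outcomes)
lower, upper = bootstrap_interval(outcomes)
print(f"estimated risk {point_estimate:.2f}, 95% interval {lower:.2f} to {upper:.2f}")
```

Reporting the interval together with the point estimate shows how much the prediction could change with a different sample of patients, which matters when the prediction informs an irreversible decision.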

Respect for patients’ autonomy during decision-making requires transparency of the data processing by AI models to explain the predictions derived from these models. Moreover, a system of continuous oversight can help to maintain public trust in this technology.

Based on these considerations as well as recent guidelines, we propose a pathway to an ethical implementation of AI-based prognostication.

It includes a checklist for new AI models that deals with medical and technical topics as well as patient- and system-centered issues.

AI models for prognostication will become valuable tools in intensive care.

However, they require technical refinement and careful implementation according to the standards of medical ethics.

Bibliography

Ethical considerations about artificial intelligence for prognostication … 1970, Viewed 24 April 2023.

Ethical implications of artificial intelligence 1970, Viewed 24 April 2023.

Ethics of Artificial Intelligence and Robotics (Stanford Encyclopedia … 1970, Viewed 24 April 2023.

Global AI Ethics: A Review of the Social Impacts and Ethical … 1970, Viewed 24 April 2023.

Jaakko Pasanen 1970, AI Ethics Are a Concern. Learn How You Can Stay Ethical, Viewed 24 April 2023.

Legal and Ethical Consideration in Artificial Intelligence in … 1970, Viewed 24 April 2023.

Santa Clara University 1970, Artificial Intelligence and Ethics: Sixteen Challenges and Opportunities, Viewed 24 April 2023.

Robot Playing Chess with a Human

Robots have advanced and the technology is growing.

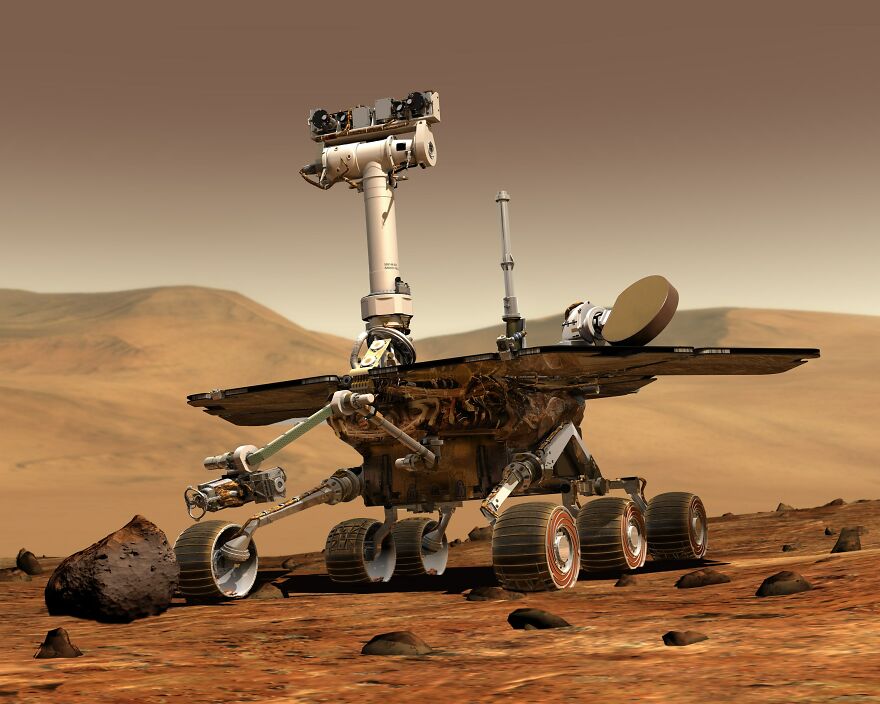

Space Explorer Robot

A robot in space.

Emotional Characteristics of Robots

Robots can be trained to have emotions like Humans.
