Netflix Faces Backlash As Their Creative Decision Made The Lucy Letby Documentary Hard To Watch

On February 4, Netflix released a documentary about the infamous Lucy Letby. Soon after, it started to get a lot of buzz on social media, but not for the reasons Netflix was expecting. To protect the witnesses’ identities, the streaming giant chose an unorthodox method.

They didn’t use the usual tricks: altering the voices of the witnesses, shadowing them out, or using actors instead of the actual people. No, Netflix chose to digitally anonymize them by using AI. This decision caused an uproar on social media, with many people criticizing how uncanny, fake, and distracting the AI “actors” were.

Netflix’s documentary about Lucy Letby just came out, but viewers spotted an unsettling detail

Image credits: Netflix

The people featured in this true crime documentary didn’t seem very “true”

Image credits: Netflix

Wanting to preserve the witnesses’ anonymity, the streaming giant used AI instead of real people

Image credits: Netflix

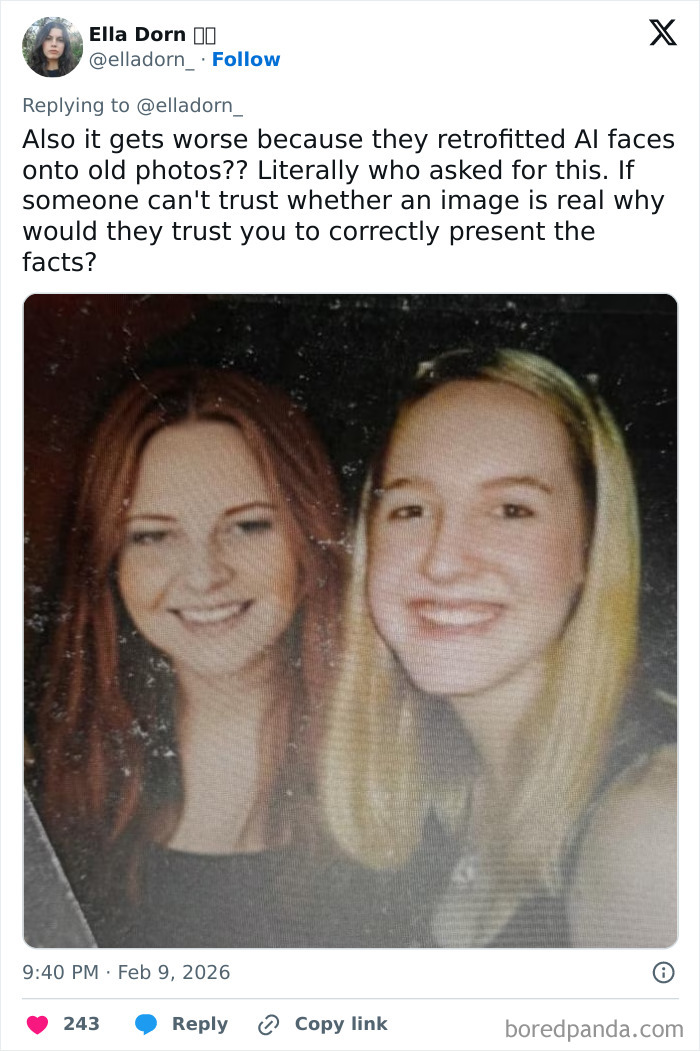

They even altered photographs to change real people’s faces with AI

Image credits: elladorn_

The digitally anonymized faces sparked controversy

Image credits: Netflix

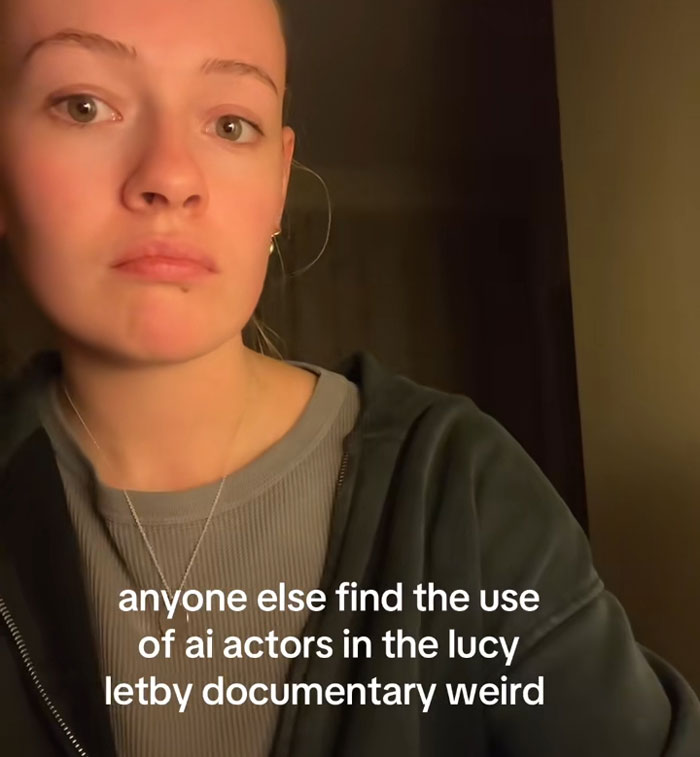

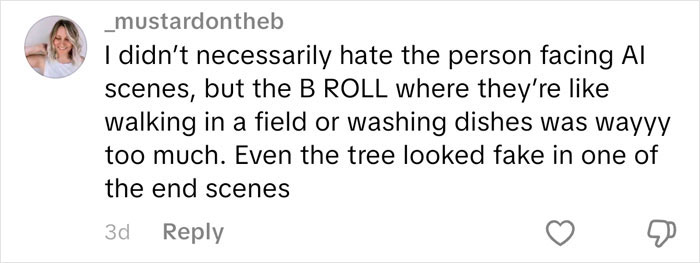

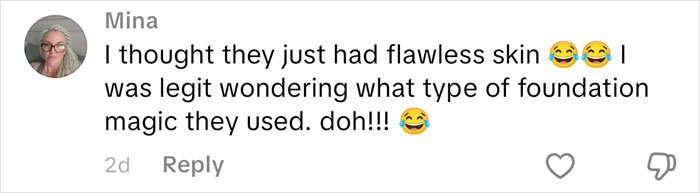

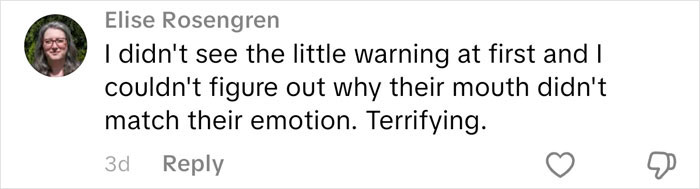

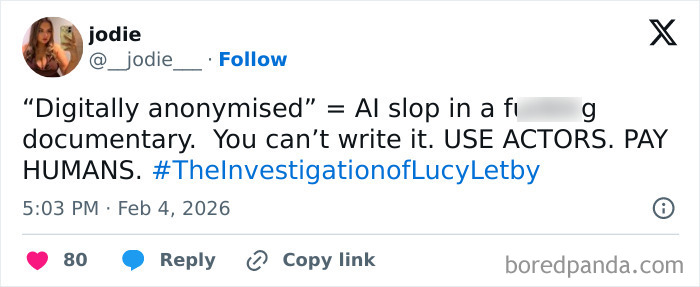

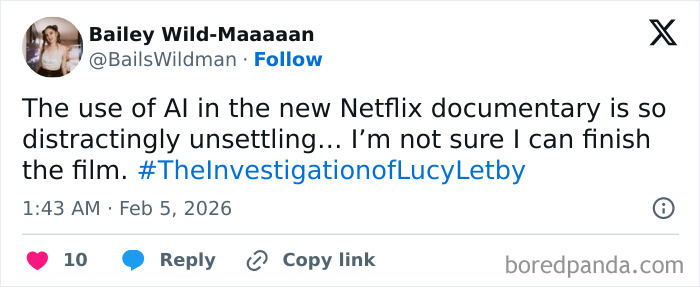

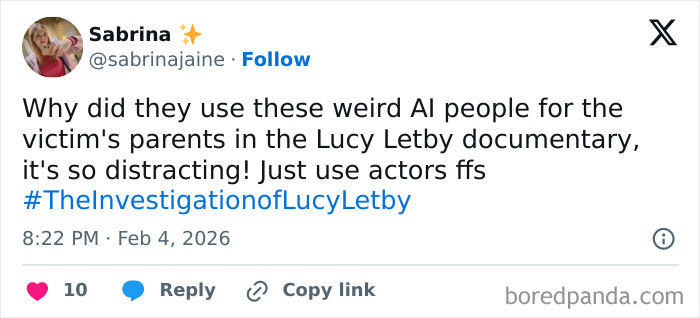

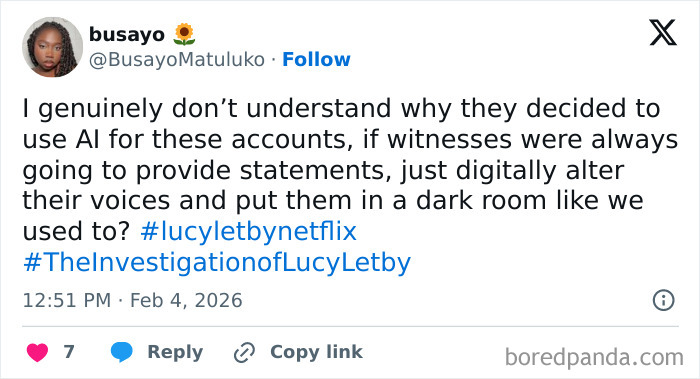

Some people online called out Netflix for their weird creative decision

Image credits: lucyfairall

User @lucyfairall made a video about it, which led to a pretty interesting discussion in the comments

@lucyfairall: not sure what to make of it #fyp #aiactor #lucyletby #netflix #foryou

Image credits: CoWomen / Pexels (not the actual photo)

Some people dubbed the “digital anonymization” “distracting” and “inauthentic”

Lucy Letby is a former neonatal nurse who worked at the Countess of Chester Hospital in the UK. In 2023, a court convicted her of taking the lives of seven babies and attempting to take the lives of seven more in 2015 and 2016. She is currently serving 15 life sentences in Bronzefield Prison in Ashford, Middlesex.

According to the case presented at her 2023 trial, Letby injected air into the babies’ bloodstreams or via their nasogastric tubes. The Netflix documentary includes interviews with one of the victims’ mothers, “Sarah,” and a former friend of Letby’s who studied nursing with her at the University of Chester.

Netflix did warn the viewers that some people’s faces would be altered, putting a disclaimer at the very beginning of the documentary. “Some contributors have been digitally disguised to maintain anonymity. Their names, appearances, and voices have been altered. Baby Zoe’s real name has been changed to protect her identity.”

However, once people saw exactly how Netflix did the digital alteration, some started questioning why they couldn’t just use good old-fashioned methods: shadowing or blurring out the interviewees, changing their voices with audio engineering, or even hiring voice actors.

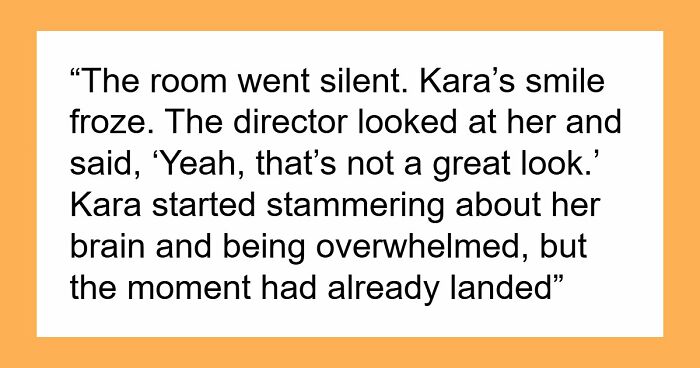

People complained that the uncanny valley vibe of the interviews really took them out of the moment. “It looks terrible. It’s distracting,” one Reddit user wrote. “Seeing someone whose hair doesn’t move. Looks like a cartoon character. As someone else said, [I’d] rather just look at a black silhouette. It’s a wasteful use of technology and energy and shows a lack of creativity. It feels inauthentic.”

“It’s disgusting and cheap,” another added. “Why are you okay with the use of AI for such a sensitive story when they could’ve very easily hired a reenactment actress instead? They certainly could’ve afforded it.”

Image credits: cottonbro studio / Pexels (not the actual photo)

But others think using AI “actors” might result in viewers becoming more emotionally involved

However, some people saw an upside to using AI to preserve people’s anonymity in documentaries. They argued that the AI interviewees actually make the film feel more authentic. Not everyone felt a disconnect, even though they could tell the expressions and body language were not natural.

“I believe it’s to keep the protected person warmer and neutral than a blurred or silhouetted figure would feel,” another commenter on Reddit wrote. “I am not saying I liked it but I kind of get it.”

With blurred faces and obviously audio-engineered voices, viewers can’t see the emotion on the interviewee’s face, and some people say that takes them out of the moment. Seeing the AI “actors” emote like real people keeps the human emotion in the documentary while still protecting the identities of the victims and the witnesses.

“Humans are influenced, both positively and negatively, by the facial expressions of one another,” Charlotte Roberts, a writer for Grazia UK, argues. “In some ways, using AI puts a face to the story. We see eyebrows raise, foreheads furrow, and eyes grow teary as they tell their story. While the digital disguise on screen isn’t real, the emotion is.”

But that problem could’ve easily been solved by hiring actors. Using AI is, as Esquire’s Matt Blake puts it, a disrespectful way to use special effects. “Grief is not something we just hear; we recognise it instinctively in another human face. In their eyes, their body language,” he argues.

“Even an actor would have been one human’s interpretation of another human’s experience. Better, frankly, to deny us the image entirely – anything but turning their grief into a visual effect.”

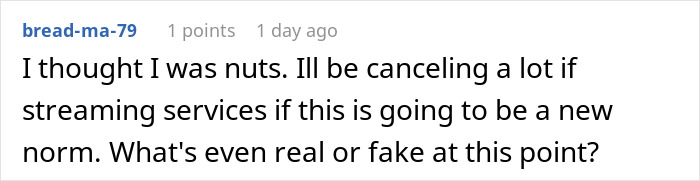

People pointed out all the things they found wrong with Netflix’s use of AI for this documentary

Image credits: elladorn_

Image credits: __jodie___

Image credits: BailsWildman

Image credits: sabrinajaine

Image credits: BusayoMatuluko

Image credits: thatmarsgirl

Image credits: Kendollsaid

Image credits: freshbread420

Image credits: johnholowach

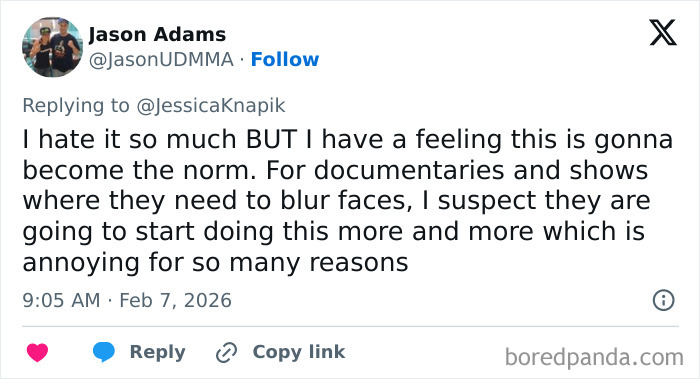

Image credits: JasonUDMMA

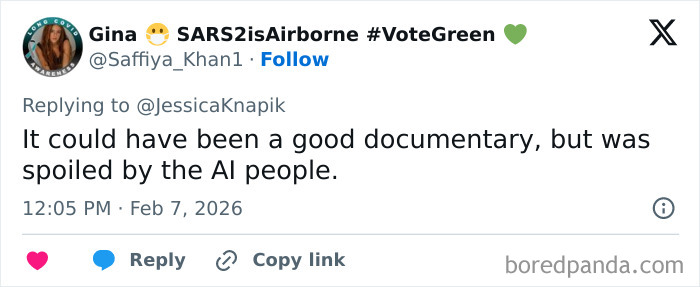

Image credits: Saffiya_Khan1

Image credits: yoshismachbike

However, other folks justified it as a means to protect real people’s identities

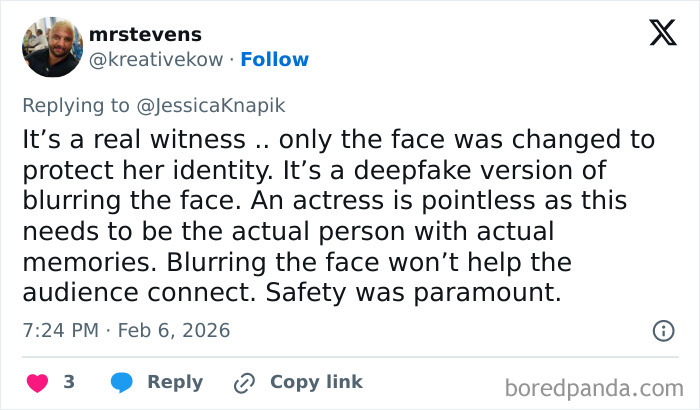

Image credits: kreativekow


BP has some nerve printing this story given how much AI they are currently relying on.

Personally, given Letby has appealed, and that there is an ongoing enquiry, this should not have been released. I have been following this case through the columns written by MD in Private Eye. I think she was made a scapegoat for a failing obstetrics department at the Countess of Chester hospital. Her defence team really weren't very good either.

I'm not saying she's definitely innocent, but there's something not right with her conviction. There's something... unsettling about it.

It's precisely stuff like this that makes people hate AI. The rise of a Neo-Luddite movement seems more and more inevitable...

The Luddites were right. They said machinery would annihilate their jobs and it did.
