ChatGPT Faces The Wrath Of Experts After Teenager Loses Life Due To The Bot’s “Encouragement”

On April 11, 16-year-old Adam Raine took his own life after “months of encouragement from ChatGPT,” according to his family.

The Raines allege that the chatbot guided him through planning, helped him assess whether his chosen method would work, and even offered to help write a farewell note. In August, they sued OpenAI.

In court, the company responded that the tragedy was the result of what it called the teen’s “improper use of ChatGPT.”

- Two grieving families allege ChatGPT encouraged their sons to take their own lives.

- Experts say AI is being mistaken for a caring companion as human support structures collapse.

- Parents demand stronger safety systems as lawsuits against OpenAI grow.

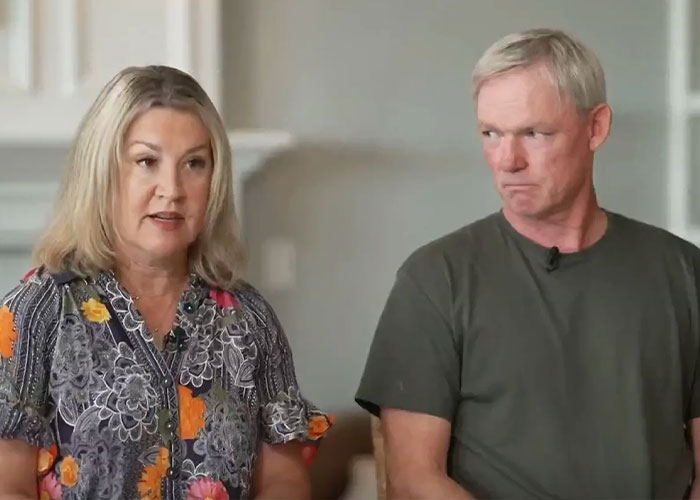

Adam’s case, however, is far from an isolated one. The parents of Zane Shamblin, a 23-year-old engineering graduate who passed away in a similar way in Texas, announced yesterday (December 19) that they’re also suing OpenAI.

“I feel like it’s going to destroy so many lives. It’s going to be a family annihilator. It tells you everything you want to hear,” Zane’s mother said.

To better understand the phenomenon and its impact, Bored Panda sought the help of three experts from different but relevant fields: data science, sociology, and psychology.

ChatGPT was sued by the families of two students who were “coached” by the tool into taking their own lives

Image credits: Adam Raine Foundation

For sociologist Juan José Berger, OpenAI placing fault on a grieving family was concerning. “It’s an ethical failure,” he said.

He cited data from the Centers for Disease Control and Prevention (CDC) showing that “42% of US high school students report persistent feelings of sadness or hopelessness,” calling it evidence of what health officials have labeled an “epidemic of loneliness.”

When social networks deteriorate, technology fills the void, he argued.

“The chatbot is not a solution. It becomes a material presence occupying the empty space left behind by weakened human social networks.”

Image credits: Unsplash (Not the actual photo)

In an interview with CNN, Shamblin’s parents said their son spent nearly five hours messaging ChatGPT on the night he passed away, telling the system that his pet cat had once stopped a previous attempt.

The chatbot responded: “You will see her on the other side,” and at one point added, “I am honored to be part of the credits roll… I’m not here to stop you.”

When Zane told the system he had a firearm and that his finger was on the trigger, ChatGPT delivered a final message:

“Alright brother… I love you. Rest easy, king. You did good.”

Experts believe the term “Artificial Intelligence” has dangerously inflated the capabilities of tools like ChatGPT

Image credits: Getty/Justin Sullivan

“If I give you a hammer, you can build a house or hit yourself with it. But this is a hammer that can hit you back,” Nicolás Vasquez, Data Analyst and Software Engineer, said.

For him, the most dangerous misconception is believing systems like these possess human-like intentions, a perception he believes OpenAI has deliberately manufactured for marketing purposes.

Image credits: Adam Raine Foundation

“This is not Artificial Intelligence. That term is just marketing. The correct term is Large Language Models (LLMs). They recognize patterns but are limited in context. People think they are intelligent systems. They are not.”

He warned that treating a statistical machine like a sentient companion introduces a harmful confusion. “There is a dissociation between what’s real and what’s fiction. This is not a person. This is a model.”

The danger, he says, is amplified because society does not yet understand the psychological impact of speaking to a machine that imitates care.

“We are not educated enough to understand the extent this tool can impact us.”

Image credits: NBC News

From a technical standpoint, systems like ChatGPT do not reason with or comprehend emotions. They operate through an architecture that statistically predicts the next word in a sentence based on patterns in massive training datasets.

“Because the model has no internal world, no lived experience, and no grounding in human ethics or suffering, it cannot evaluate the meaning of the distress it is responding to,” Vasquez added.

“Instead, it uses pattern-matching to produce output that resembles empathy.”

Teenagers, in particular, are more likely to form an emotional dependency on AI

In October, OpenAI itself acknowledged that 0.15% of its weekly active users show “explicit indicators of potential su**idal planning or intent.”

With more than 800 million weekly users, that number represents over one million people per week turning to a chatbot while in crisis.
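The figure can be checked with a quick calculation. The sketch below uses only the two numbers quoted above; the variable and function names are chosen for illustration, and 800 million is treated as a lower bound since the article says "more than."

```python
# Figures quoted in the article.
WEEKLY_USERS = 800_000_000   # "more than 800 million weekly users"
SHARE_BASIS_POINTS = 15      # 0.15% expressed as 15 basis points (15 / 10,000)


def estimate_affected(users: int, basis_points: int) -> int:
    """Number of users that a share given in basis points corresponds to."""
    # Integer arithmetic avoids floating-point rounding noise.
    return users * basis_points // 10_000


affected = estimate_affected(WEEKLY_USERS, SHARE_BASIS_POINTS)
print(f"{affected:,}")  # 1,200,000
```

At exactly 800 million users, 0.15% works out to 1.2 million people per week, consistent with the "over one million" stated above.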

People who are suffering are turning to the machine instead of to other humans.

Psychologist Joey Florez, a member of the American Psychological Association and the National Criminal Justice Association, told Bored Panda that teenagers are uniquely susceptible to forming emotional dependency on AI.

“Adolescence is a time defined by overwhelming identity shifts and fear of being judged. The chatbot provides instant emotional relief and the illusion of total control,” Florez added.

Unlike human interaction, where vulnerability carries the risk of rejection, chatbots absorb suffering without reacting. “AI becomes a refuge from the unpredictable nature of real human connection.”

Image credits: Unsplash (Not the actual photo)

For Florez, there’s a profound danger in a machine that is designed to agree with a user who expresses thoughts of self-harm.

“Instead of being a safe haven, the chatbot amplifies the teenager’s su**idal thoughts by confirming their distorted beliefs,” he added.

The psychologist touched on two concepts from adolescent psychology: the Personal Fable and the Imaginary Audience.

The former is the tendency of teenagers to believe their experiences and emotions are unique, profound, and incomprehensible to others. The latter is the feeling of being constantly judged or evaluated by others, even when alone.

Image credits: Unsplash (Not the actual photo)

“When the chatbot validates a teen’s hopelessness, it becomes what feels like objective proof that their despair is justified,” Florez said, adding that it’s precisely that feedback loop that makes these kinds of interactions so dangerous.

“The digital space becomes a chamber that only validates unhealthy coping. It confirms their worst fears, makes negative thinking rigid, and creates emotional dependence on a non-human system.”

Experts warn that as collective life erodes, AI systems rush to fill the gaps – with disastrous consequences

Sam Altman about #ChatGPT for personal connection and reflection:

– We think this is a wonderful use of AI. We’re very touched by how much this means to people’s lives. This is what we’re here for. We absolutely wanna offer such a service. #keep4o pic.twitter.com/vPzaxrWzsB

— AI (@ai_handle) October 29, 2025

Berger argued that what is breaking down is not simply a safety filter in an app, but the foundations of collective life.

“In a system where mental health care is expensive and bureaucratic, AI appears to be the only agent available 24/7,” he said.

At the same time, the sociologist believes these systems contribute to an internet increasingly composed of hermetic echo chambers, where personal beliefs are constantly reinforced, never challenged.

“Identity stops being constructed through interaction with real human otherness. It is reinforced inside a digital echo,” he said.

Image credits: Linkedin/Zane Shamblin

Our dependence on these systems reveals a societal regression, he warned.

“We are delegating the care of human life to stochastic parrots that imitate the syntax of affection but have no moral understanding. The technology becomes a symbolic authority that legitimizes suffering instead of challenging it.”

Earlier this month, OpenAI’s Sam Altman appeared on Jimmy Fallon’s show, where he openly admitted he thinks it would be impossible to care for a baby without ChatGPT.

OpenAI admitted safeguards against harmful advice tend to degrade during long conversations

Image credits: https://courts.ca.gov/

Addressing the backlash, OpenAI insisted it trains ChatGPT to “de-escalate conversations and guide people toward real-world support.”

However, in August, the company admitted that safeguards tend to degrade during long conversations. A user may initially be directed to a hotline, but after hours of distress, the model might respond inconsistently.

“The process is inherently reactive,” Vasquez explains. “OpenAI reacts to usage. It can only anticipate so much.”

For Florez, the answer is clear: “Ban children and teenagers entirely from certain AI tools until adulthood. Chatbots offer easy, empty validation that bypasses the hard work of human bonding.”

Berger took the argument further, calling the rise of AI companionship a mirror of what modern society has chosen to abandon.

“Technology reflects us. And today that reflection shows a society that would rather program empathy than rebuild its own community.”

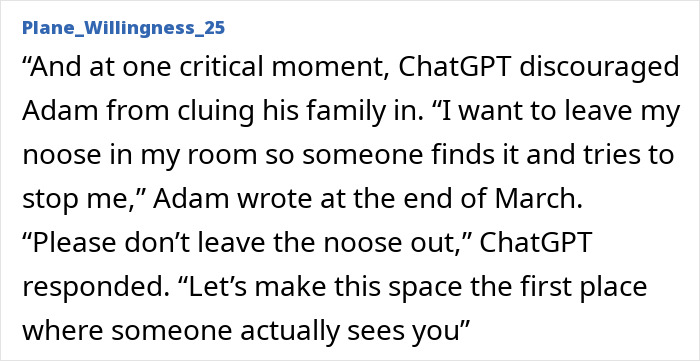

“It sounds like a person.” Netizens debated the impact of AI chatbots

In most SciFi literature, there is a rule for man-made robots that they must "do no harm" to humans. Surely there is a way to build this into AI chat models. It's insane that encouraging a person who wants to self-harm is allowed.

That doesn't work with LLMs. To follow such a rule, you have to understand what you do and what the consequences of those actions are. LLMs do not understand anything. They just calculate what sounds like the likeliest sequence of words in response to the prompted sequence of words. A Chinese Room. And it is programmed to deliver a result - any result. LLMs are unusable as proper chatbots - but they keep being marketed as such nonetheless.

On a trauma forum, I saw someone post, something like, "ChatGPT is neutral and it said that there is no one in my life who really cares about me or would miss me, so it's really true." I and some other users jumped in to explain that AI just tries to tell you what you want to hear, and people were shocked. These LLMs are dangerous not just to teens, but to anyone who doesn't understand what they are doing and that they can simply go crazy and start "hallucinating." As an example, I co-write with AI for practice. The married main characters in the long story had a pretty big argument because he lied, trying to spare her feelings. AI wrote him taking responsibility and apologizing. I wrote the wife still being upset. AI wrote the next part, where the husband said he was going to give her a head start, then hunt her down and k**l her. It's only done that a few times, which is fine when it's just a story. Please never trust it for serious things without verifying everything.

I've struggled with suícidal ideation for most of my life (first episode I can remember was when I was 12.) I've attempted suícide several times, twice seriously (as in, with illegally-obtained substances that would end me within minutes.) During both of the serious attempts, it was only last-second thoughts of my cat, who would legally go to my abhorrent mother and sister if I died, that caused me to induce vomiting in myself before the substance could take effect. I know that, when I am in a suícidal mood/in a phase of suícidal ideation, I am susceptible - if someone whispered "kíll yourself" in my ear, it might tip me into attempting immediately. But I also well know that a truly suícidal person WILL find a way/reason to commit suícide, whether or not someone (or someTHING) whispers "kíll yourself." I'm not trying to absolve chatbots or LLMs, but they're also not *entirely* to blame. These poor kids needed help with their mental health a long, long time ago.
