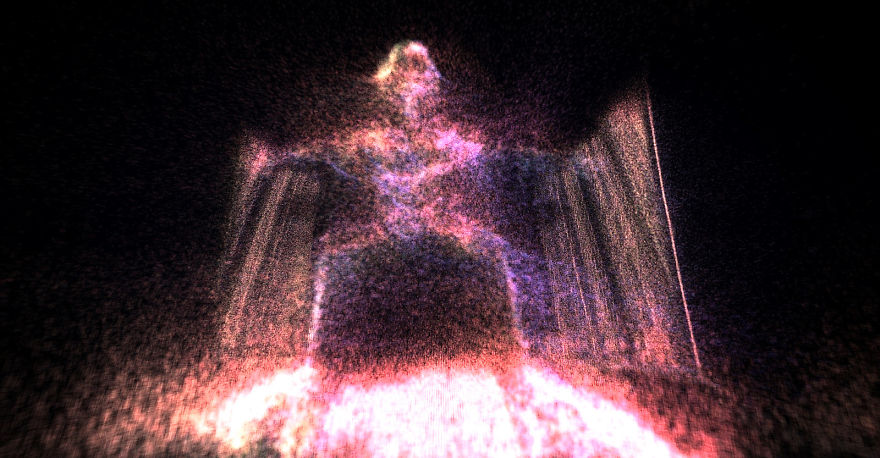

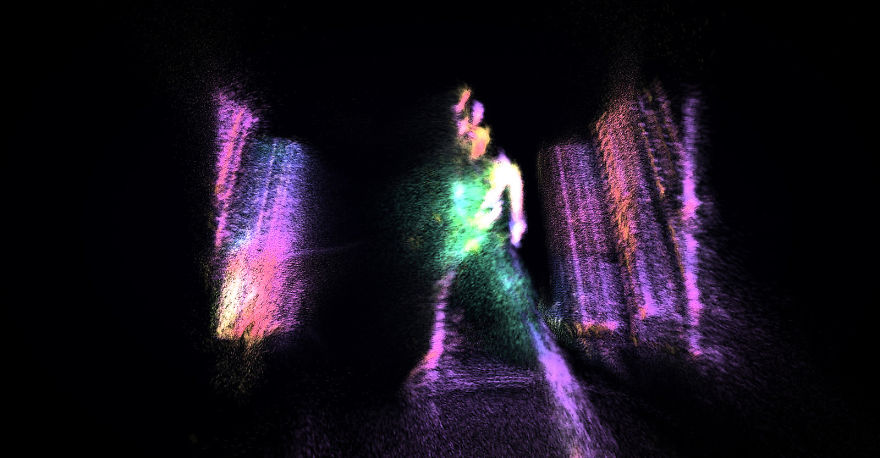

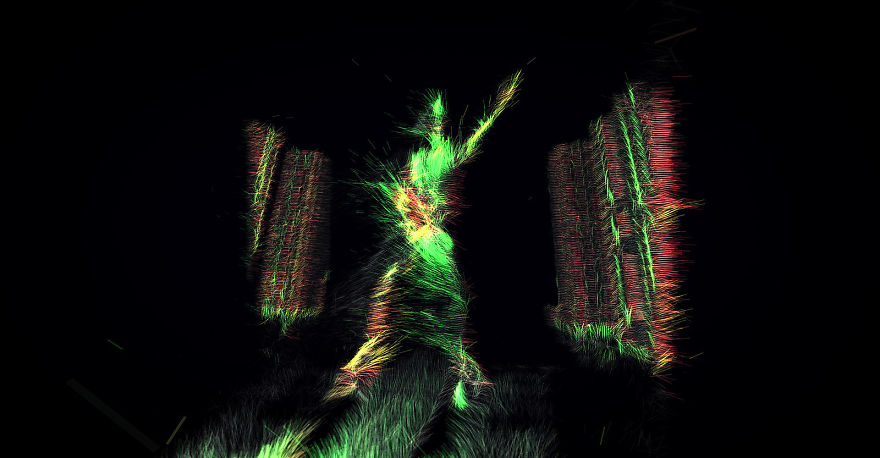

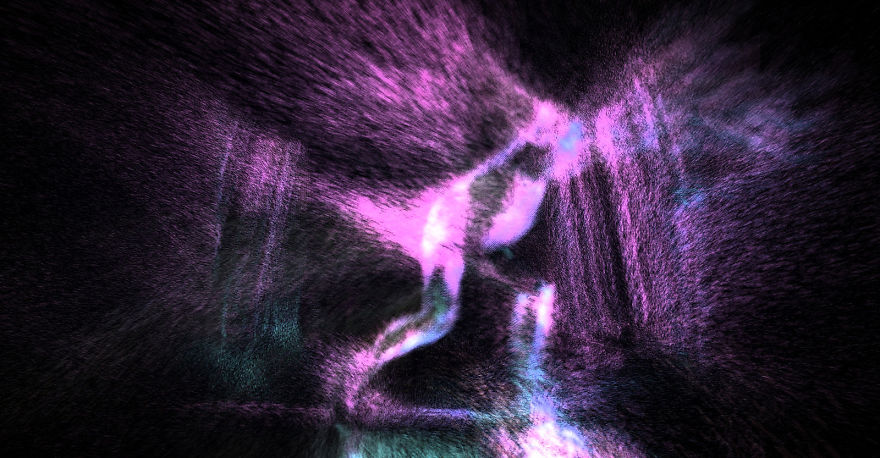

schnellebuntebilder and kling klang klong developed an interactive framework based on vvvv that creates synaesthetic experiences of sound and visuals generated from body movement captured as Kinect2 data. In their first collaborative work, “MOMENTUM”, the user and their surroundings transform into a fluid creature within an ever-transforming system of particles: a virtual reality in which movement becomes sound and music, and matter seems to dissolve.

See the video here: https://vimeo.com/112193826

The idea of creating a real-time analytics tool for movement originated at the Choreographic Coding Lab of Motion Bank in 2013 and was quickly set in motion.

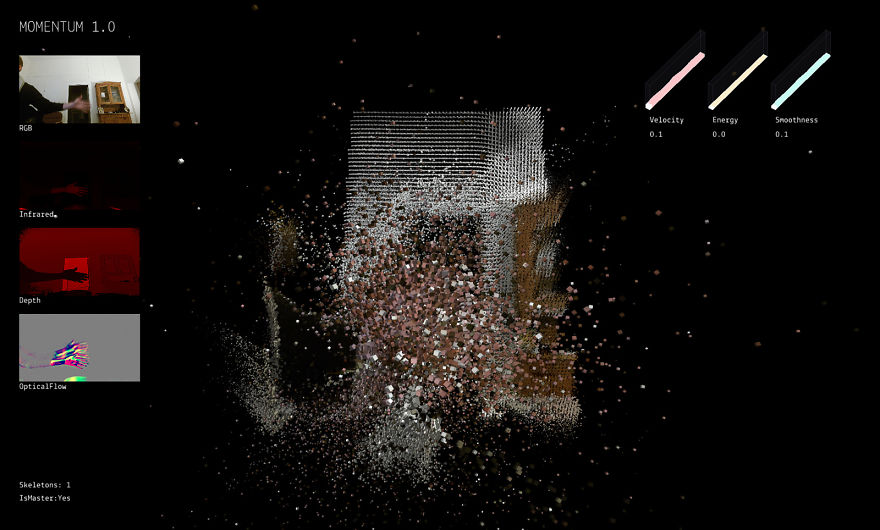

In Frankfurt we started to extract the “qualities of dance” using all available Kinect data (skeleton, depth, IR, microphone). The categories established there, including velocity, smoothness, bounding box, traveling, energy and so forth, form the foundation of a shared vocabulary between visual artists, sound artists and dancers. The project, which kling klang klong rendered into sound, earned the working title “motionklong” due to its conjunctive character.
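
To make these categories more concrete, the following is a minimal sketch of how such descriptors could be derived from a sequence of skeleton frames. It is not the project's vvvv patch: the function names, the unit-mass assumption for energy, and the particular definitions of "traveling" and "roughness" (used here as an inverse of smoothness) are illustrative assumptions only.

```python
import math

def centroid(joints):
    """Mean position of all joints in one skeleton frame."""
    n = len(joints)
    return tuple(sum(j[i] for j in joints) / n for i in range(3))

def bounding_box(joints):
    """Extent (width, height, depth) of the axis-aligned box around the joints."""
    mins = tuple(min(j[i] for j in joints) for i in range(3))
    maxs = tuple(max(j[i] for j in joints) for i in range(3))
    return tuple(hi - lo for lo, hi in zip(mins, maxs))

def motion_qualities(frames, dt):
    """Hypothetical descriptors for a list of frames.

    frames: list of frames, each a list of (x, y, z) joint positions.
    dt:     time between frames in seconds.
    """
    # Per-joint speed between consecutive frames.
    speeds = [
        [math.dist(a, b) / dt for a, b in zip(prev, curr)]
        for prev, curr in zip(frames, frames[1:])
    ]
    mean_speed = [sum(s) / len(s) for s in speeds]

    # Velocity: average joint speed over the whole sequence.
    velocity = sum(mean_speed) / len(mean_speed)
    # Energy: summed squared joint speed per step (assumes unit mass per joint).
    energy = sum(v * v for s in speeds for v in s) / len(speeds)
    # Traveling: path length of the body centroid through space.
    centroids = [centroid(f) for f in frames]
    traveling = sum(math.dist(a, b) for a, b in zip(centroids, centroids[1:]))
    # Roughness: mean absolute change of speed; lower values mean smoother motion.
    changes = [abs(b - a) / dt for a, b in zip(mean_speed, mean_speed[1:])]
    roughness = sum(changes) / len(changes) if changes else 0.0

    return {
        "velocity": velocity,
        "energy": energy,
        "traveling": traveling,
        "roughness": roughness,
        "bounding_box": bounding_box(frames[-1]),
    }
```

A sequence needs at least two frames for any of the speed-based values to be defined; a real pipeline would additionally filter sensor noise before differentiating.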

More info: vimeo.com

Source: vimeo.com

During the summer of 2014, schnellebuntebilder worked on the visual translation of the real-time movements. Each autonomous three-dimensional point within the point cloud constantly emits particles. Based on the intensity and direction of each move, the GPU-based system calculates and visualizes the trajectories of over 2 million particles. Simulations of physical forces such as wind and gravity are added to the particle system to generate a variety of visual and sonic spheres for this collaborative art piece: “MOMENTUM”.
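
The emit-and-integrate idea described here can be sketched on the CPU in a few lines. This is an illustration only, not the GPU implementation used in the piece: the constants `GRAVITY`, `WIND` and `DRAG`, the `Particle` type, and the choice of a semi-implicit Euler step are all assumptions made for the example.

```python
from dataclasses import dataclass

# Illustrative force parameters (assumed values, not taken from the project).
GRAVITY = (0.0, -9.81, 0.0)   # constant downward acceleration
WIND = (1.5, 0.0, 0.0)        # velocity of the surrounding air
DRAG = 0.5                    # how strongly particles are pulled toward the wind

@dataclass
class Particle:
    pos: tuple
    vel: tuple
    age: float = 0.0

def emit(point, motion):
    """Spawn a particle at a point-cloud position with the local motion as velocity."""
    return Particle(pos=point, vel=motion)

def step(p, dt):
    """Advance one particle by dt using semi-implicit Euler integration."""
    # Acceleration = gravity + drag toward the wind velocity.
    acc = tuple(g + DRAG * (w - v) for g, w, v in zip(GRAVITY, WIND, p.vel))
    vel = tuple(v + a * dt for v, a in zip(p.vel, acc))
    pos = tuple(x + v * dt for x, v in zip(p.pos, vel))
    return Particle(pos, vel, p.age + dt)

def simulate(points, motions, dt, steps):
    """Emit one particle per point and integrate all of them for a number of steps."""
    particles = [emit(pt, mv) for pt, mv in zip(points, motions)]
    for _ in range(steps):
        particles = [step(p, dt) for p in particles]
    return particles
```

On a GPU the same per-particle update would run in parallel for every particle, which is what makes particle counts in the millions feasible in real time.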

The foundation for this shared vocabulary consists of two-dimensional and three-dimensional motion-tracking data (e.g. skeleton, optical flow…), which runs through a custom-built tool based on vvvv. The evaluated output is delivered via an OSC interface to kling klang klong’s sound framework “MOTIONKLONG”, which is based on Max. There the data is transformed into generative sounds; it also controls the behaviour and sonic attributes of the adaptive music running in Ableton Live. At the same time, millions of particles form dimensional polygon sculptures and fleeting traces of light. In this way a sound-generating real-time particle system comes into existence, turning a dancer’s movement into audible and visible output.
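
As a rough illustration of the OSC hand-off, the sketch below encodes float values as OSC 1.0 messages and sends them over UDP using only the Python standard library. The address pattern `/motion/<name>`, the host, and the port are hypothetical; the actual addresses exchanged between the vvvv tool and the Max patch are not documented here.

```python
import socket
import struct

def _pad(data: bytes) -> bytes:
    """OSC strings are null-terminated and padded to a multiple of 4 bytes."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)

def osc_message(address: str, *values: float) -> bytes:
    """Encode an OSC message carrying only 32-bit big-endian floats."""
    type_tags = "," + "f" * len(values)
    payload = b"".join(struct.pack(">f", v) for v in values)
    return _pad(address.encode("ascii")) + _pad(type_tags.encode("ascii")) + payload

def send_qualities(qualities, host="127.0.0.1", port=9000):
    """Send each named value as its own message, e.g. /motion/velocity (assumed naming)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        for name, value in qualities.items():
            sock.sendto(osc_message(f"/motion/{name}", float(value)), (host, port))
```

A receiver such as a Max patch listening on the same UDP port could then route each address to a sound parameter. Calling `send_qualities({"velocity": 0.8, "energy": 2.4})` would emit two such messages.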

Look behind the screens: https://vimeo.com/112223038
