People Tested How Google Translates From Gender-Neutral Languages And Shared The “Sexist” Results

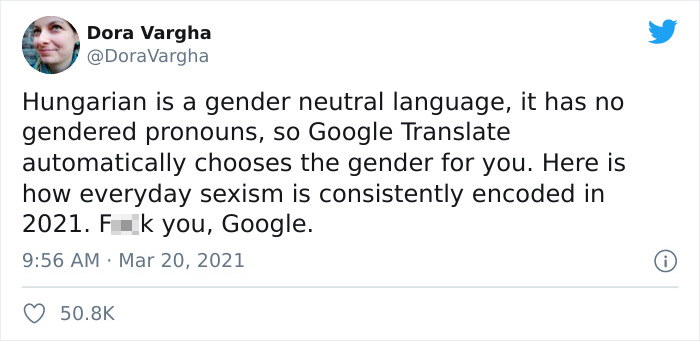

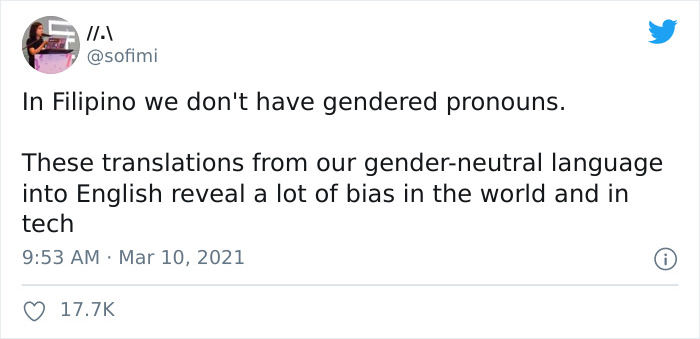

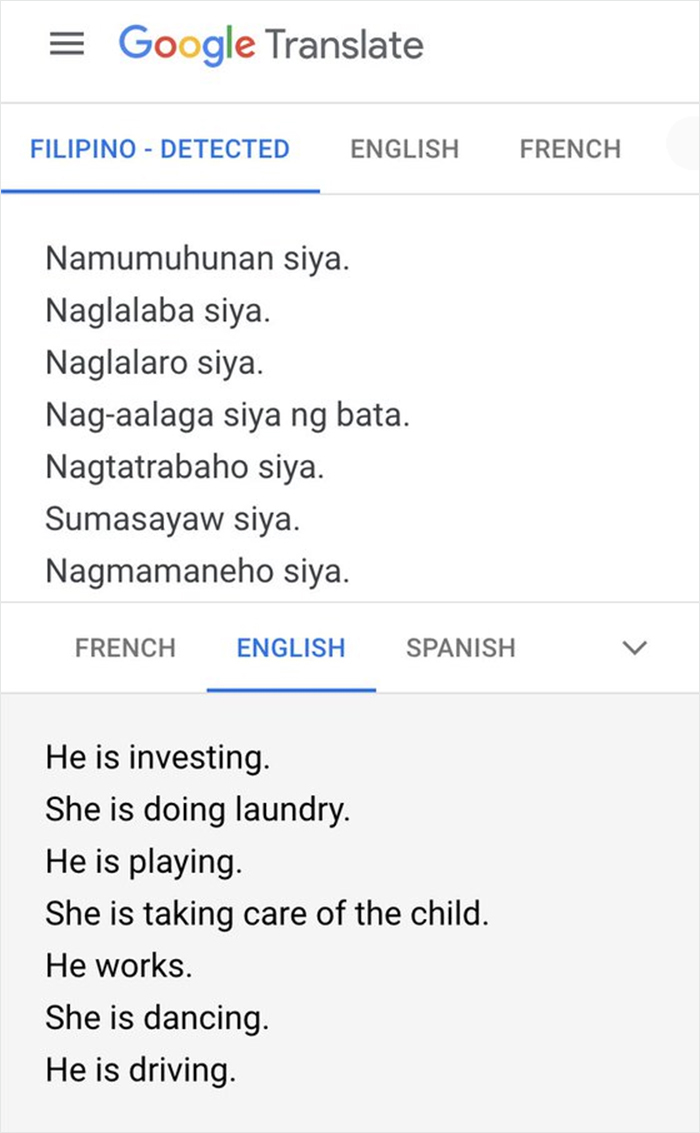

Translation is hard work: details, nuances, and philological quirks can get lost in ways that only a real language expert would notice. And even though computer-based translation programs like Google Translate have been a lifesaver, they’re far from perfect. Some people on Twitter, like Dora Vargha, have been calling out Google Translate for being ‘sexist’ because it assigns genders to professions and activities when translating from gender-neutral languages. Scroll down to have a look and let us know what you think, dear Pandas.

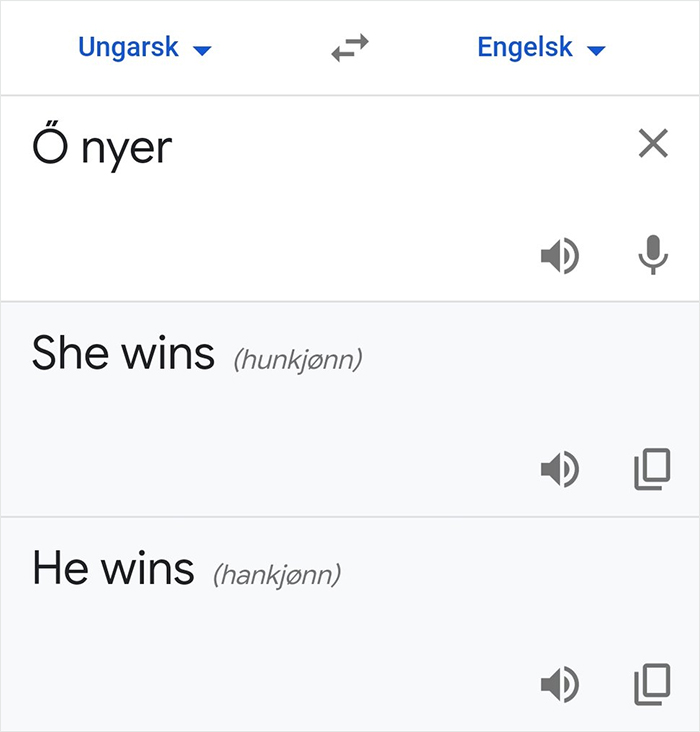

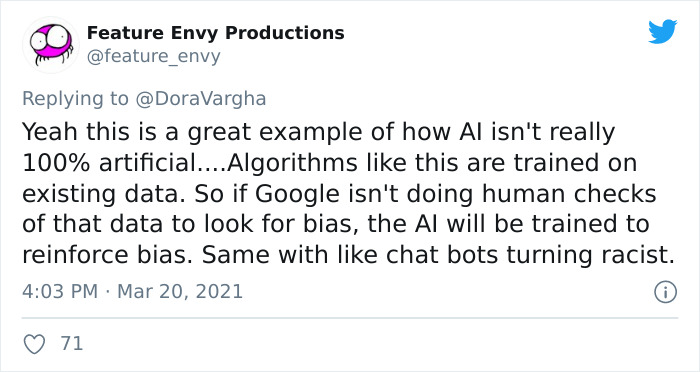

But before we dive in, a small heads-up. There may be some misconceptions about how the Google Neural Machine Translation system works. It uses an artificial neural network capable of deep learning: it goes through millions of examples and ‘learns’ how to translate. In short, the ‘bias’ wasn’t programmed in; instead, the system reflects the patterns in the existing translations it learned from. What’s more, Google recently announced its Rewriter program, which uses post-editing to address gender bias.

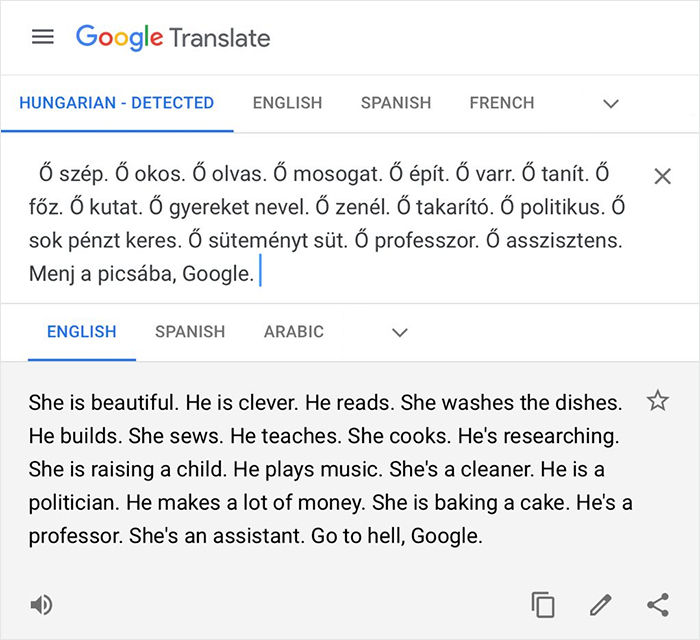

Artist, musician, and writer Bani Haykal is one of the people who drew attention to Google Translate’s bias with gender-neutral languages, in a video he shared on Twitter. He told Bored Panda that one of his main reasons for making the video was to highlight the inherent problem of gender stereotypes. “We cannot look at the technology without first looking at what / who made them. As much as I want to say this is a technological or algorithmic problem, which it is, it first has to be examined with consideration of its ecology. How did it become like this?” Bani mused. “With such stereotypes, biases, harm that is very much prevalent in oppressive, patriarchal culture, politics and economics, I feel that we are perhaps missing the point to only look at the tools and consider how to eliminate bias.”

Some Twitter users have been pointing out that Google Translate may be ‘sexist’ in the way that it deals with gender-neutral languages

Image credits: DoraVargha

Image credits: DoraVargha

Someone else posted another example of this

Image credits: fdbckfdfwd

Image credits: fdbckfdfwd

Bani explained that we ought to first consider the role of those who shape these technologies. In Bani’s opinion, moving forward and eliminating biases is only possible by taking a closer look at hiring policies and at how inclusive, diverse, and progressive the employees are. “It is not enough to just say ‘we need to fix this feature.’ No, we need to fix a core component of the system that perpetuates this brokenness, the people who are part of the mechanism which develop these tools.”

Bani believes that it isn’t fair to compare real-life translators to Google Translate because they’re vastly different. “It’s comparing apples and oranges. A person who is effectively bilingual or a polyglot can translate complex expressions because of their deep understanding of a particular culture and context, so I don’t think it is fair to compare the two or suggest which is better, they are not the same!”

He mentioned that it’s important that we don’t conflate automated tools with skilled professionals. “Offsetting and attributing these skills to tools flattens our interactions to something purely technical, and in my opinion, it shouldn’t be done as it is dehumanizing. It completely reduces a person with a skill to a mere tool. We should be resisting this!”

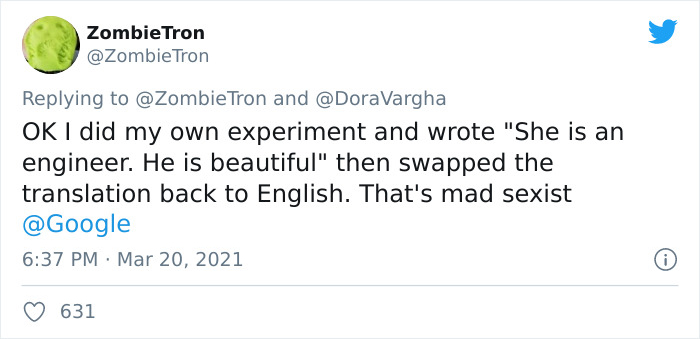

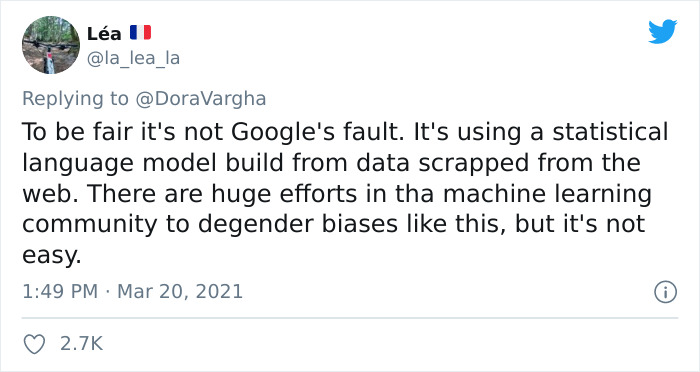

Others have been experimenting with Google Translate to check whether this is true

Image credits: ZombieTron

Image credits: ZombieTron

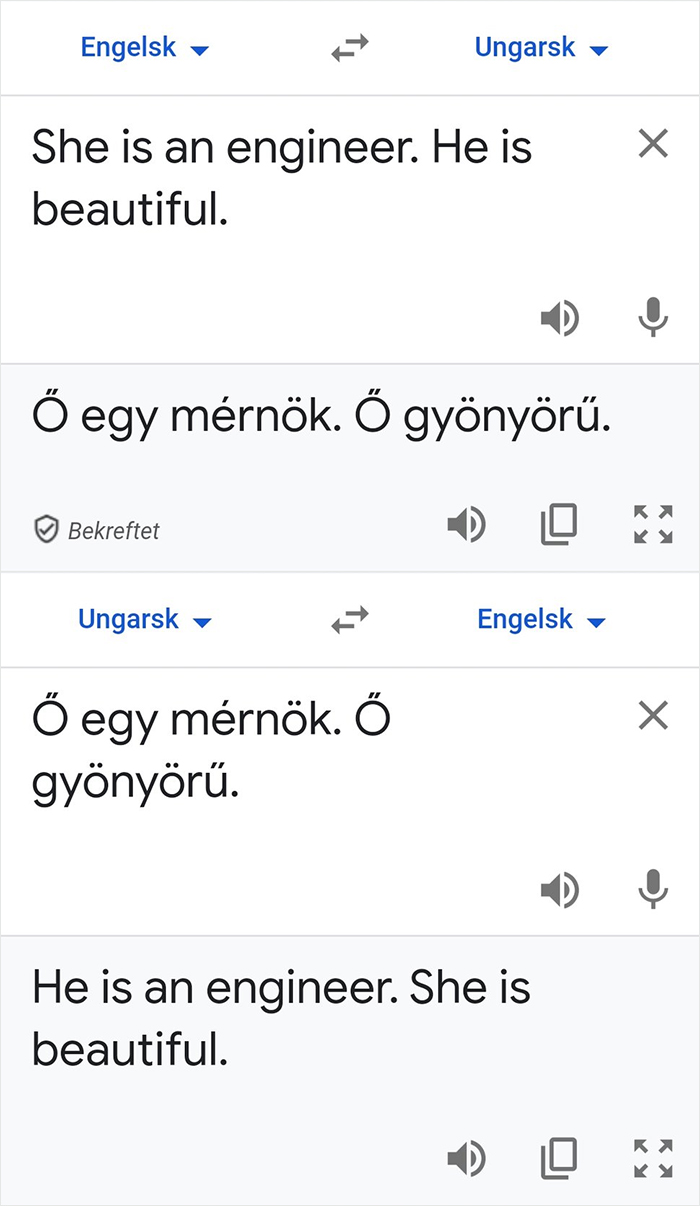

Plenty of languages appear to have the same translation issues that the Twitter users pointed out

Image credits: kaiju_kirju

Image credits: lauraolin

Image credits: lauraolin

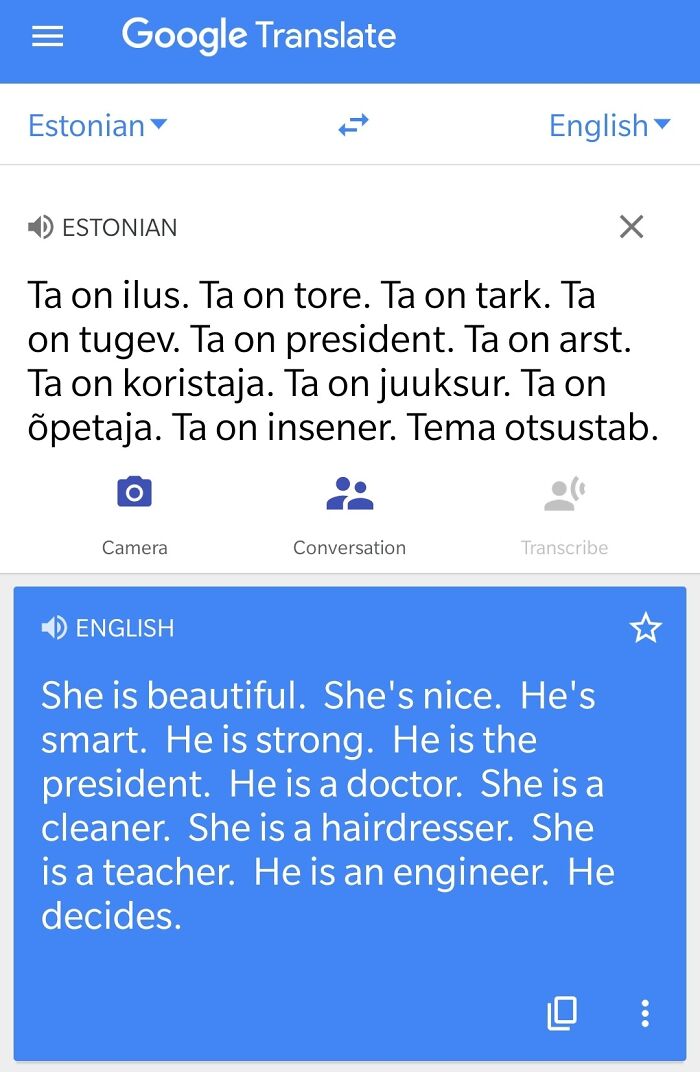

Image credits: sofimi

Image credits: sofimi

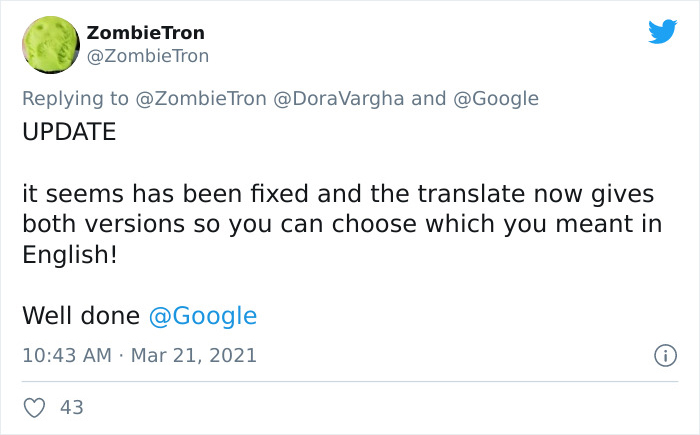

Image credits: ZombieTron

Image credits: ZombieTron

Image credits: SaimDI

Image credits: ZombieTron

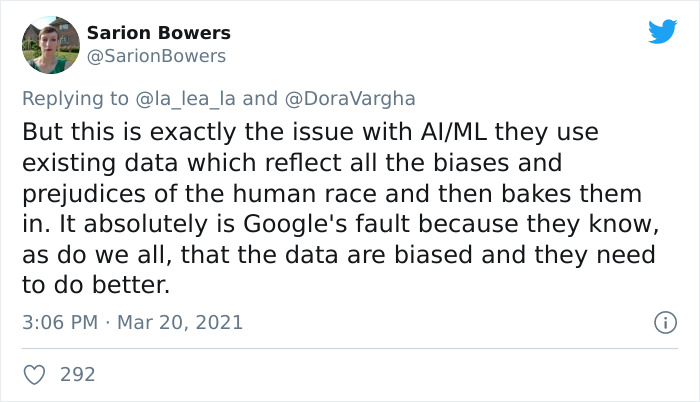

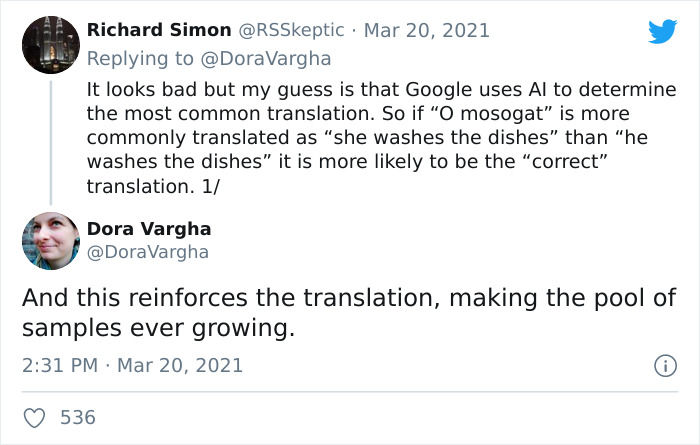

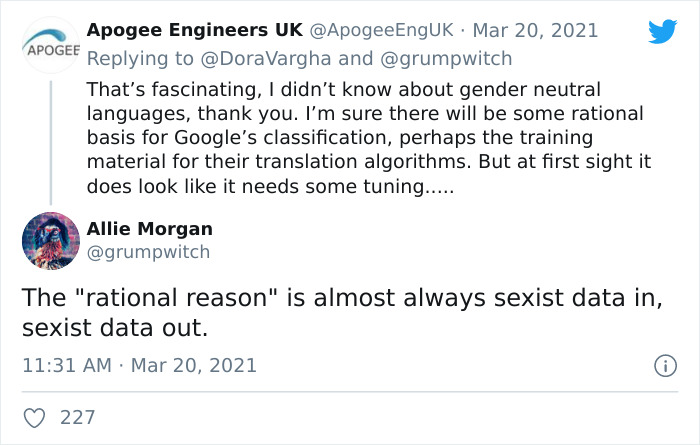

Some internet users believe that Google isn’t as neutral as it should be

Image credits: relevanne

Image credits: relevanne

Image credits: uffishthot

What’s more, Google Translate looks at the broader context to work out which translation is the most relevant. The algorithm works like a mirror: it shows us what it judges to be the most accurate rendering of what we asked for, based on the examples it has learned from. In other words, it reflects and compiles how people all around the world would translate things.

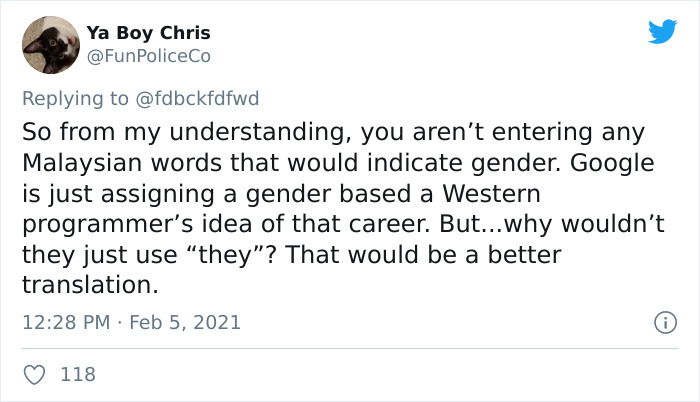

While Google Translate could use the gender-neutral ‘they’ instead of ‘he’ or ‘she,’ this could cause a lot of confusion just to keep the system polite. Though ‘they’ can be used in the singular, it’s also plural and has very different connotations. For anyone relying on Google Translate, this could be a practical nightmare: many languages mark grammatical number (and, in some, case) throughout a sentence, so reading ‘they’ as plural would change the entire sentence.

Meanwhile, using the singular ‘it’ wouldn’t work either. It might be gender-neutral, but it’s also dehumanizing and could cause even more offense. Let’s not forget that even though a program is doing the translating, language is a passionate, innate part of the human experience: making mistakes is part of the learning process, and we should embrace that. If Google Translate were a student, we ought to encourage it to do better and learn from its mistakes instead of trying to get it canceled for being ‘sexist.’

The problem some people have with Google Translate could be addressed by showing every possible gendered version of a translated sentence, so that users can pick the one that fits. Or we could go down the path of randomization and have the system assign genders to sentences at random rather than drawing on its millions and millions of real-world examples. The remaining option is to change how translators around the world work, so that Google’s algorithm learns from these new examples.
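To make the difference between the ‘mirror’ behavior and the show-every-version idea concrete, here is a toy sketch. It is not how Google’s neural system is built: counting pronouns in a list is a deliberately simplified stand-in for what a neural network picks up from its training data, and the corpus, its counts, and the function names are all hypothetical.

```python
from collections import Counter

# Hypothetical (profession, pronoun) pairs standing in for the human
# translations a real system learns from. The counts are invented.
TOY_CORPUS = [
    ("engineer", "he"), ("engineer", "he"), ("engineer", "she"),
    ("nurse", "she"), ("nurse", "she"), ("nurse", "he"),
]


def sentence(pronoun: str, profession: str) -> str:
    """Build an English sentence such as 'She is a nurse.'"""
    article = "an" if profession[0] in "aeiou" else "a"
    return f"{pronoun.capitalize()} is {article} {profession}."


def translate_most_frequent(profession: str, corpus=TOY_CORPUS) -> str:
    """'Mirror' behavior: use whichever pronoun the examples use most often."""
    counts = Counter(pronoun for prof, pronoun in corpus if prof == profession)
    if not counts:
        raise ValueError(f"no examples for {profession!r}")
    pronoun, _ = counts.most_common(1)[0]
    return sentence(pronoun, profession)


def all_gendered_versions(profession: str) -> list[str]:
    """Alternative: return every gendered rendering and let the user choose."""
    return [sentence(pronoun, profession) for pronoun in ("she", "he")]


print(translate_most_frequent("engineer"))  # He is an engineer.
print(all_gendered_versions("engineer"))    # ['She is an engineer.', 'He is an engineer.']
```

The first function can only ever echo the majority of its examples, which is why a gender-neutral source sentence comes out as ‘he’ or ‘she’ depending on the profession. The second drops the guess altogether at the cost of showing the user more than one answer.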

While some Twitter users were determined to stamp out sexism wherever they found it, a few others asked whether ranting about Google Translate was the wisest approach when there are more pressing gender-based discrimination issues to act on.

However, others pointed out that the situation is much more nuanced

Image credits: la_lea_la

There were people on both sides of the fence in this debate

Image credits: SarionBowers

Image credits: DoraVargha

Image credits: grumpwitch

Image credits: feature_envy

Image credits: FunPoliceCo

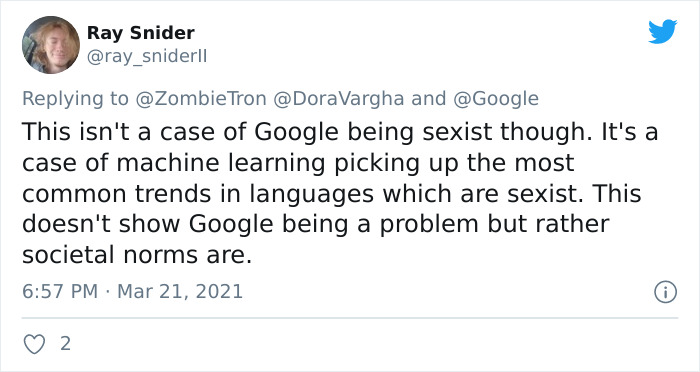

Image credits: ray_sniderII

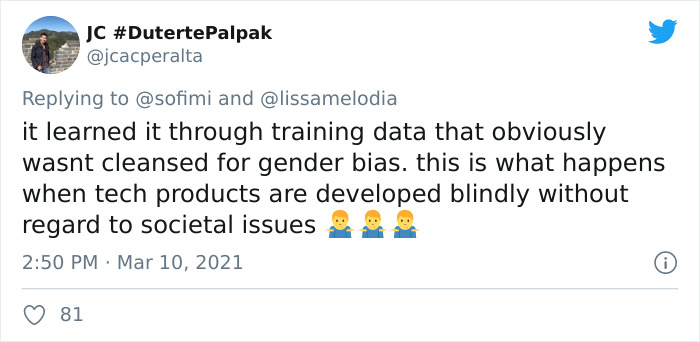

Image credits: jcacperalta

Image credits: EG198

Is Google Translate’s ‘sexism’ something that affects you, dear Pandas? What’s your opinion about some Twitter users calling out the system for assigning genders the way that real-life translators do? How do you think Google Translate could be improved? Should it be changed at all? Share your thoughts and feelings in the comment section below.
