People Are Saying That Twitter’s Photo Preview Algorithm Is Racist, Twitter Agrees And Tries To Fix It

Computers don’t really make mistakes. They look like they do sometimes, but they are just following the code and inputs that they were assigned… and sometimes there are some interesting inputs involved.

Not too long ago, internauts were laughing at Google’s image search algorithms for showing African American doctors when searching for white American doctors.

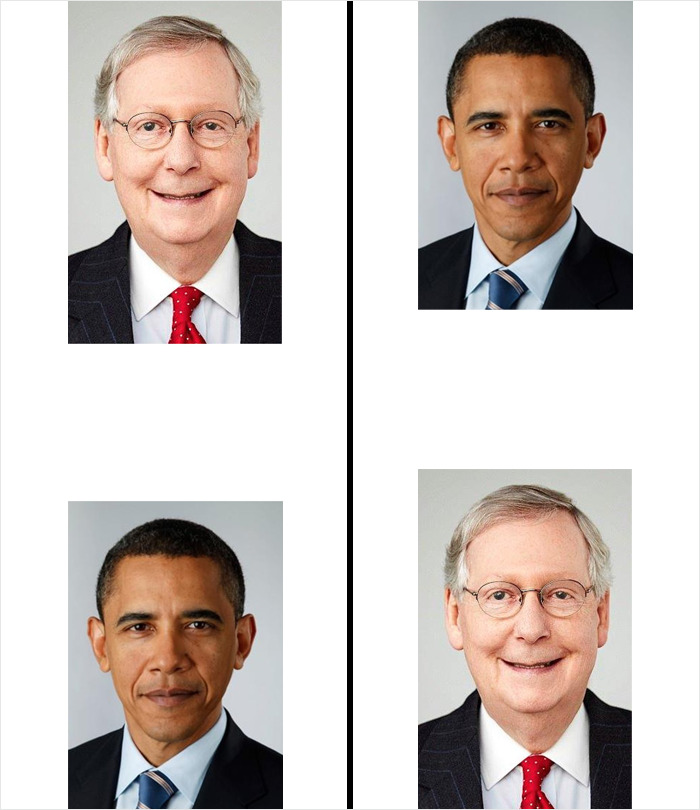

This time around, it’s Twitter and its image cropping algorithms. People have begun noticing how Twitter crops images in a way that gives preference to people with white skin as opposed to those with black skin.

Twitter has an AI that, when you post long, slim photos, centers the preview on what it thinks is the best part of the image

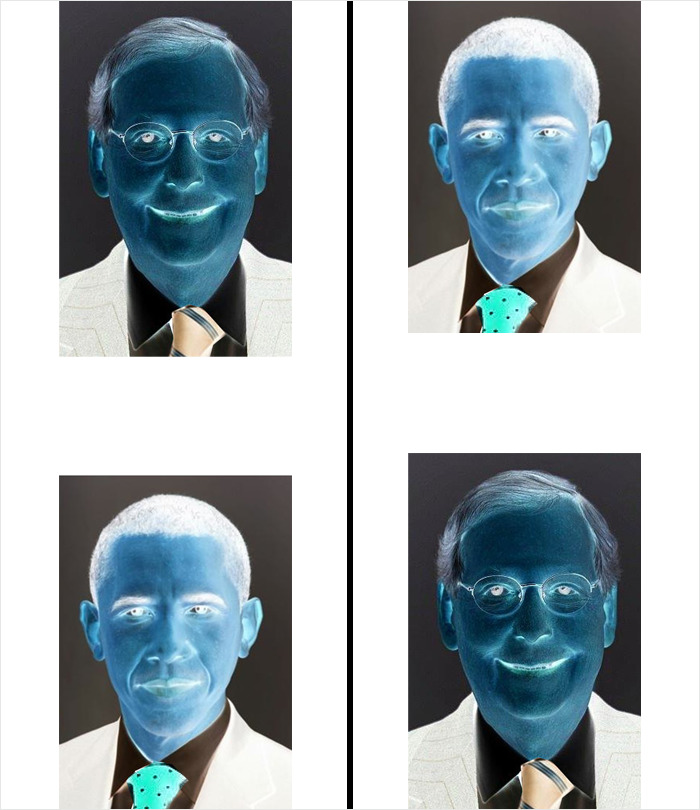

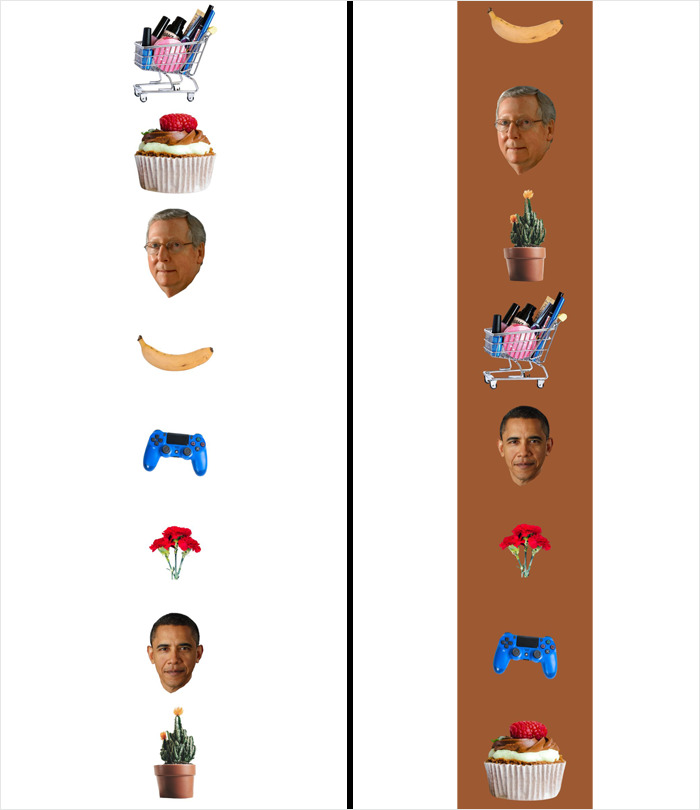

But people noticed that it prefers to center on white faces rather than black ones: the original input picture actually included Barack Obama as well

Image credits: bascule

So, it all started when Twitter user Colin Madland was helping a colleague troubleshoot a related problem on a completely different platform. It turns out that the video conferencing program Zoom seems to erase a black person’s head when a custom background is used. It simply treats the head as part of the background.

But it wasn’t until he posted some example screenshots to Twitter for further troubleshooting that he realized Twitter was also up to its neural networking shenanigans. He noticed that, on the mobile version, his long horizontal screenshot was cropped to include only him, and not his colleague.

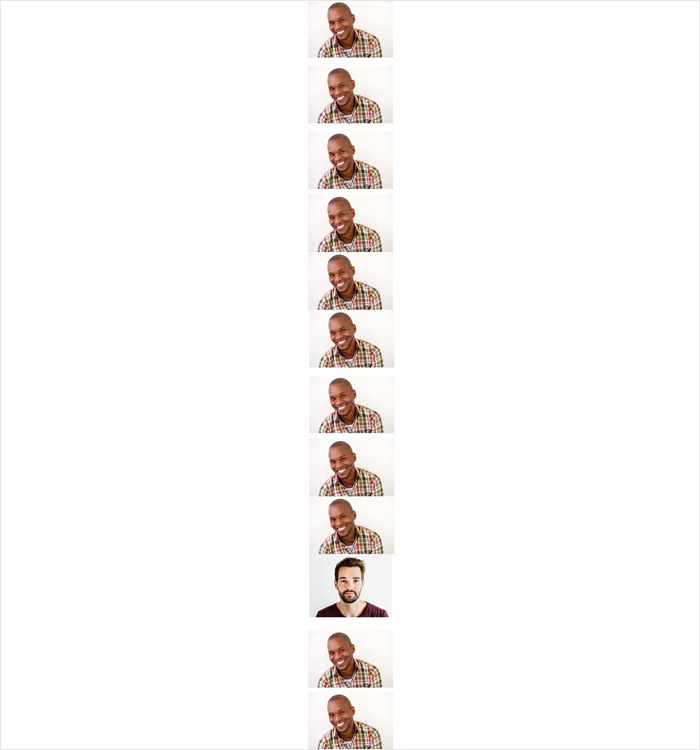

Having noticed this, other people started creating pictures with white and black people in them with odd, elongated ratios to force the cropping algorithm to choose a centering position. And a little bit of unofficial experimenting showed that the system tends to prefer white faces over black ones.

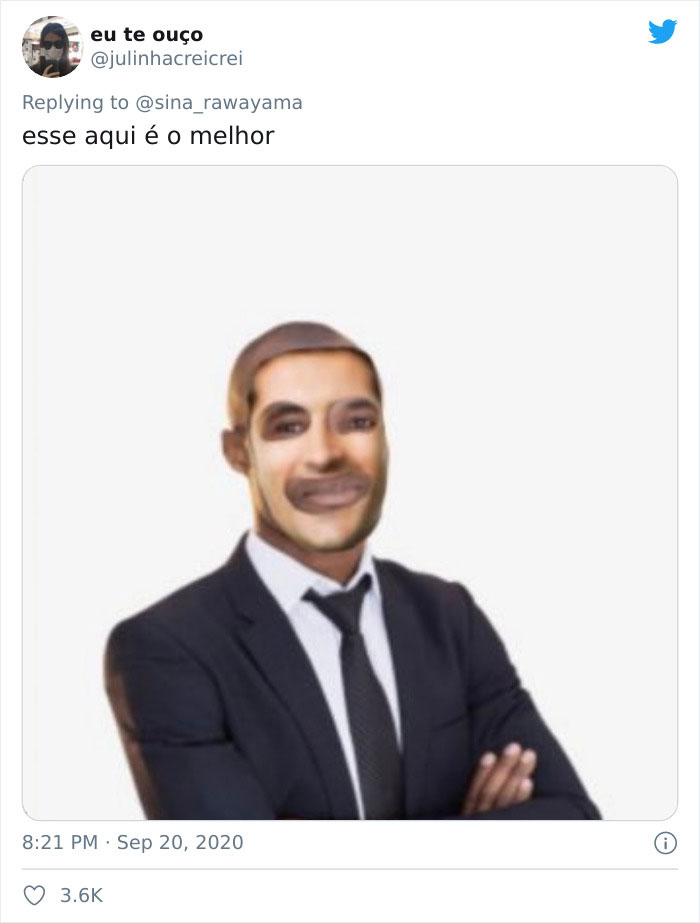

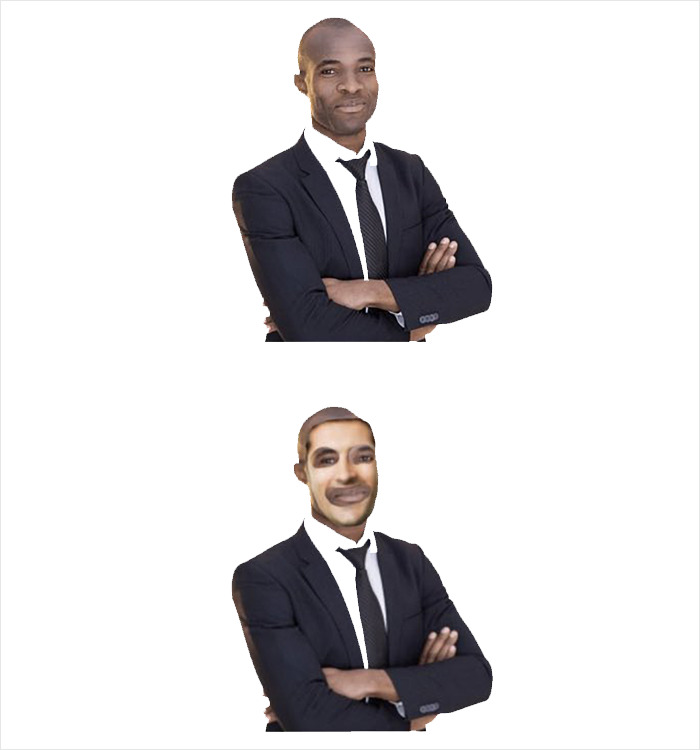

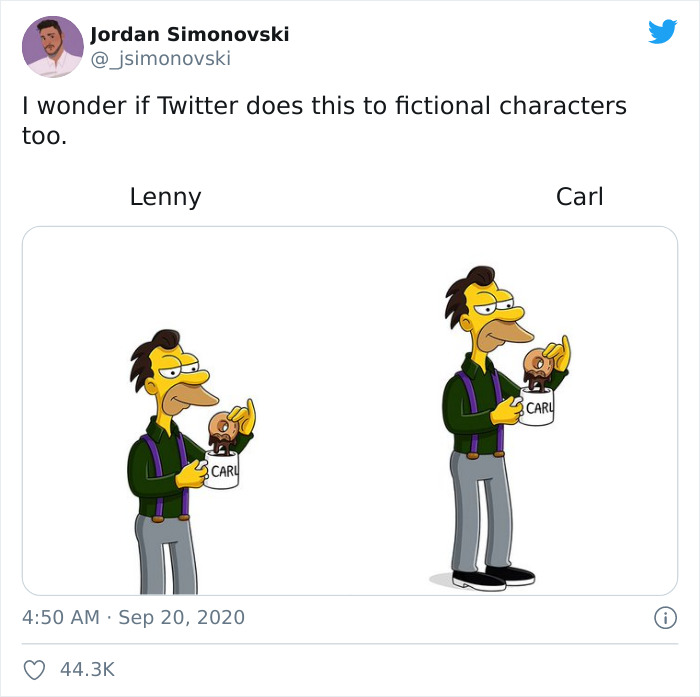

People started testing this out with other photos, and sure enough the tweet centers on the white businessman

… even though the input also included a black businessman, and the position in the photo didn’t matter

Image credits: alexhanna

A number of tweeters tested this out with stock photos, politicians, and even cartoons, namely the Simpsons characters Lenny and Carl. More often than not, the photos were cropped in a way that showed a preference for white faces.

Back in early 2018, Twitter identified the problem of tweets showing photo previews that are off-center. It therefore implemented a mechanism that is supposed to recognize the individual elements in a photo and center on those rather than on the middle of the image. Prior to that, it was all run on facial recognition alone, and since not all photos have faces, the approach needed to improve.
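To picture what "centering on the most prominent element" could mean in practice, here is a minimal Python sketch. It is not Twitter's code: the `scores` grid (one "prominence" value per pixel) and the `crop_window` function are hypothetical names made up for illustration, and the article does not describe how a real system would compute such scores.

```python
def crop_window(scores, crop_w, crop_h):
    """Return (left, top, right, bottom) of a crop centered on the highest score.

    `scores` is a hypothetical 2D list of per-pixel "prominence" values;
    how a real system would compute them is an assumption left open here.
    """
    height = len(scores)
    width = len(scores[0])
    if crop_w > width or crop_h > height:
        raise ValueError("crop is larger than the image")

    # Find the (row, col) of the single highest-scoring pixel.
    best_row, best_col = max(
        ((r, c) for r in range(height) for c in range(width)),
        key=lambda rc: scores[rc[0]][rc[1]],
    )

    # Center the window on that pixel, then clamp it to the image bounds.
    left = min(max(best_col - crop_w // 2, 0), width - crop_w)
    top = min(max(best_row - crop_h // 2, 0), height - crop_h)
    return left, top, left + crop_w, top + crop_h


# A tall, narrow 2x8 "image" with two candidate regions of unequal score.
scores = [[0.0, 0.0] for _ in range(8)]
scores[1][0] = 0.6  # region near the top
scores[6][1] = 0.9  # somewhat higher-scoring region near the bottom
window = crop_window(scores, crop_w=2, crop_h=3)
print(window)
```

In a winner-takes-all scheme like this one, whichever region scores even slightly higher decides the whole preview, so any systematic tilt in the scores would show up directly in who gets cropped out.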

Results varied to some extent, but most of them seemed to show a racial bias in Twitter’s AI

Image credits: gnomestale

Like many new technologies, it is not perfect. According to a NIST study, many of today’s facial recognition algorithms perform worse on non-white faces, with false positive rates for Asian and African American faces higher by a factor of 10 to 100 than for Caucasian faces, depending on the algorithm. So, there’s room for improvement.

Now, this is an understandable error, and it’s not as if the algorithm is purely biased, as some photo cropping results showed black faces too. Liz Kelley of the Twitter Communications Team also explained that the neural network was tested for racial and gender bias before release and none was detected. However, there is clearly a need for more analysis and testing, as these results seem biased.

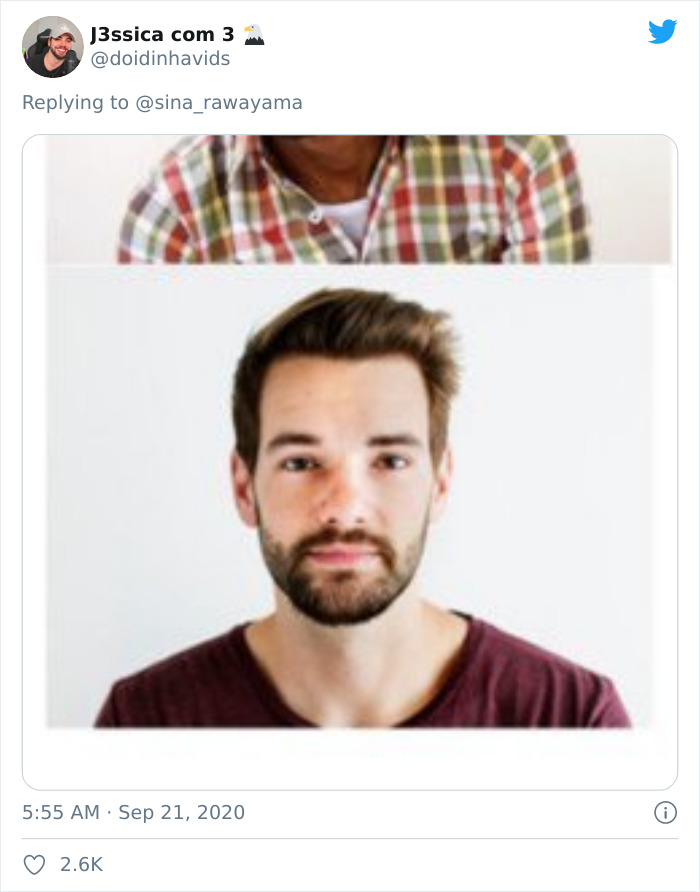

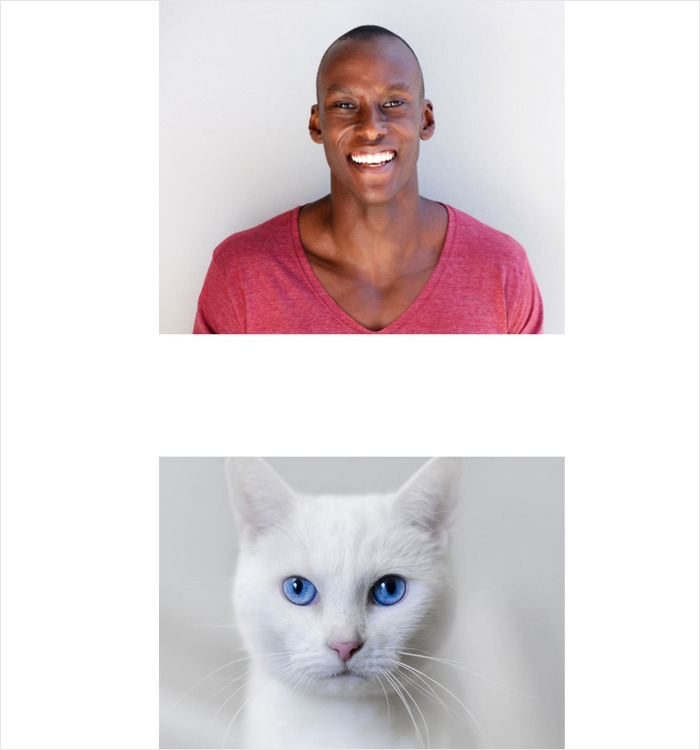

Besides politicians and celebrities, people tested out a bunch of stock photos

All 4 inputs seen below were centered on the white businessman, regardless of the number of people

Image credits: sina_rawayama

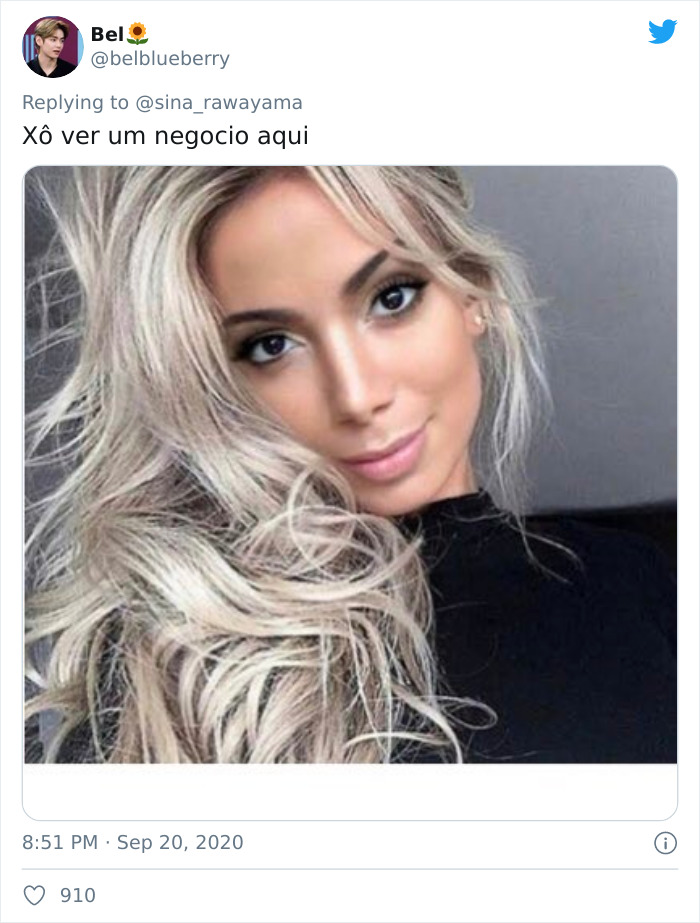

The AI even centered on photos that were poorly edited to contain a white person’s face

Image credits: julinhacreicrei

Quantity was also not a variable in deciding who to center on as the results were the same

Image credits: doidinhavids

Twitter’s Chief Design Officer, Dantley Davis, also said that it is 100% Twitter’s fault and no one should say otherwise. That was despite a number of people arguing that this isn’t done on purpose (it’s an AI, after all) and that fault is irrelevant because the focus should be on fixing the problem.

For now, it is unclear what causes the algorithm to prefer white faces over black ones. The only explanation offered so far is that it tends to look for the most prominent face in the photo.

One person tried out different colored ties and even inverted the colors with varying results

Image credits: bascule

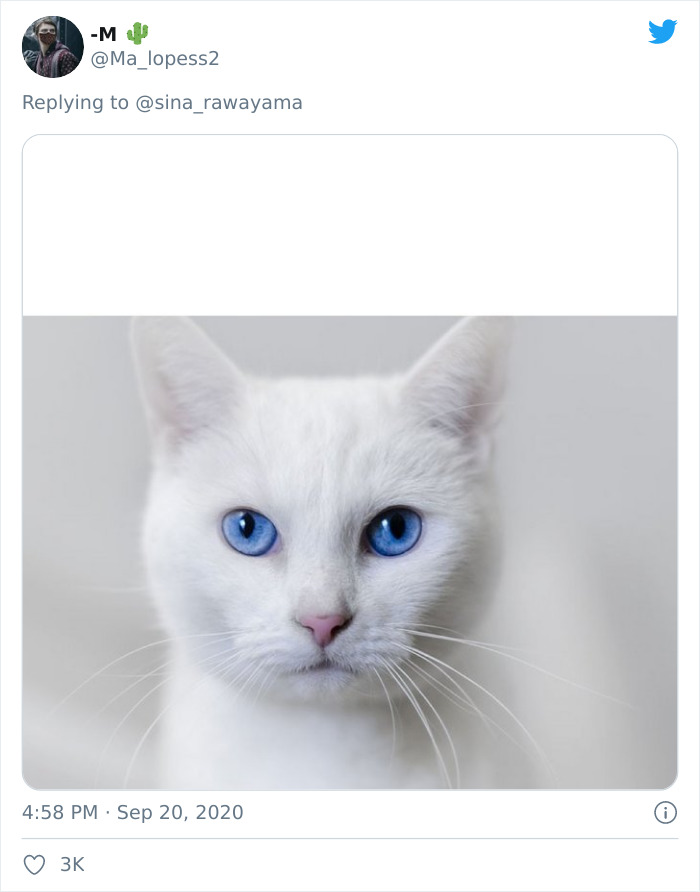

Apparently, there is even a preference among animals, simply for those with a brighter color palette

Image credits: Ma_lopess2

Twitter is aware of this problem and is working on fixing it

Image credits: belblueberry

While Davis explained that the AI might be focusing on additional variables to determine where to center the photo, others hint that the aspect ratio, the background or the color scheme might have something to do with it. This is, however, a non-scientific explanation that is yet to be confirmed or denied.

The issue caused a bit of a ruckus on the internet, with individual tweets exemplifying the bias and some even calling the algorithm racist. Said tweets gained thousands upon thousands of likes and retweets, with one particular tweet comparing Mitch McConnell and Barack Obama getting over 185,000 likes.

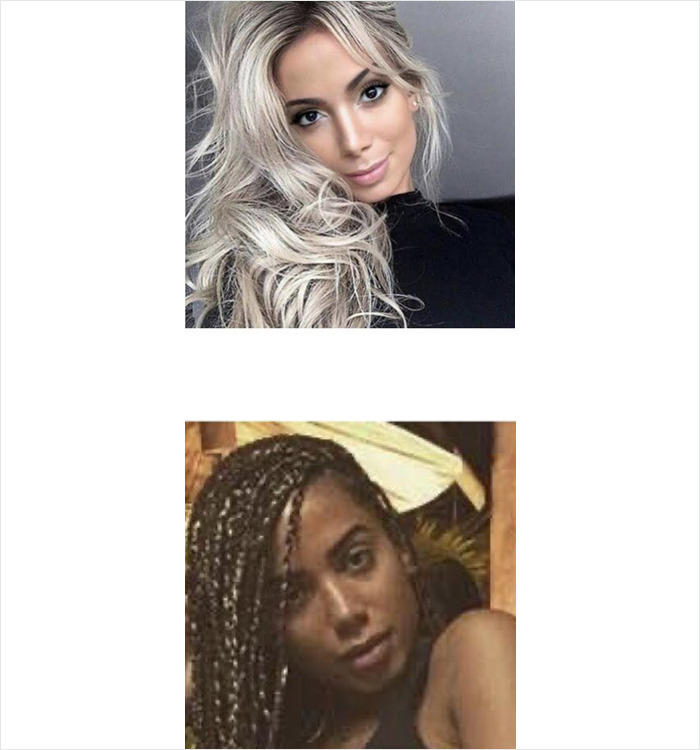

The AI seemed biased even with cartoons as seen with Lenny and Carl from the Simpsons

Image credits: _jsimonovski

Same with dogs

Image credits: MarkEMarkAU

The algorithm wasn’t completely biased as there were results where it preferred black people, as seen in the preview and inputs below

It was speculated that it may be because of the smile or the prominent faces, which are easier for the AI to pick up

Image credits: TLopesVictorM

The only exception to the rule was this free-for-all where it was faces as well as objects

Image credits: mikaozl

What are your thoughts on this? Let us know in the comment section below!

Here are how some tweeters reacted to this


I'm so confused. I think I understand the written explanation of the problem (Twitter preview detects faces and centers it in the preview, but tends to pick a white face), but I don't understand which image in each set is the input and which is the preview (if that's what the two are)...

Same here. I don't use Twitter, maybe it's clearer for Twitter users? And I also don't understand why you get a downvote for saying you don't understand...

Okay this is just silly. I feel like we are grasping at reasons to hate each other. Come on be better. It’s exhausting

This! It is just a bug in the AI. But try to report such a bug to Twitter. Would you get more than a "thank you for the report" email, and a resounding silence? Even when there is no malice involved, such a bug report would most likely be just buried to death.

What the #$&%! Is this post going on about? Start writing better posts, people!
